HPE6-A78 Aruba Certified Network Security Associate Exam Questions and Answers

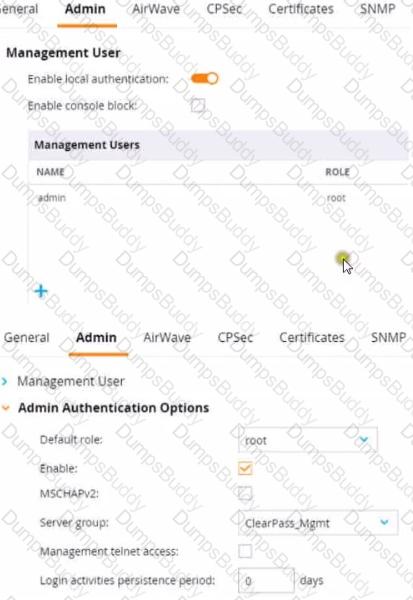

Refer to the exhibit.

This Aruba Mobility Controller (MC) should authenticate managers who access the Web UI to ClearPass Policy Manager (CPPM). ClearPass admins have asked you to use RADIUS and explained that the MC should accept managers' roles in Aruba-Admin-Role VSAs.

Which setting should you change to follow Aruba best security practices?

Options:

Change the local user role to read-only

Clear the MSCHAP check box

Disable local authentication

Change the default role to "guest-provisioning"

Answer:

C

Explanation:

To follow Aruba security best practices, you should disable local authentication. When integrating with an external RADIUS server like ClearPass Policy Manager (CPPM) to authenticate administrative access to the Mobility Controller (MC), it is a best practice to rely on the external server rather than the local user database. This centralizes the management of manager accounts and roles in CPPM and removes locally stored admin credentials that could otherwise be used to bypass it.

Your company policies require you to encrypt logs between network infrastructure devices and Syslog servers. What should you do to meet these requirements on an ArubaOS-CX switch?

Options:

Specify the Syslog server with the TLS option and make sure the switch has a valid certificate.

Specify the Syslog server with the UDP option and then add an IPsec tunnel that selects Syslog.

Specify a priv key with the Syslog settings that matches a priv key on the Syslog server.

Set up RadSec and then enable Syslog as a protocol carried by the RadSec tunnel.

Answer:

A

Explanation:

To ensure secure transmission of log data over the network, particularly when dealing with sensitive or critical information, using TLS (Transport Layer Security) for encrypted communication between network devices and syslog servers is necessary:

Secure Logging Setup: When configuring an ArubaOS-CX switch to send logs securely to a Syslog server, specifying the server with the TLS option ensures that all transmitted log data is encrypted. Additionally, the switch must have a valid certificate to establish a trusted connection, preventing potential eavesdropping or tampering with the logs in transit.

Other Options:

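Options B, C, and D do not encrypt logs between the switch and the Syslog server in the way the policy requires. Option B leaves the Syslog session itself on unencrypted UDP and depends on a separate tunnel. Option C refers to a "priv key," which is an SNMPv3 privacy concept and not a Syslog setting. Option D refers to RadSec, which secures RADIUS traffic and does not carry Syslog.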
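To make the TLS option concrete, the sketch below shows, in Python and not in switch CLI, roughly what a Syslog-over-TLS sender does: it builds an RFC 5424 message, applies the octet-counting framing that RFC 5425 specifies, and writes the result to a socket wrapped in a certificate-validating TLS context. The host name `switch-01`, the application name `demo`, and the `send_over_tls` helper are illustrative assumptions and say nothing about ArubaOS-CX internals. Port 6514 is the registered default for Syslog over TLS.

```python
import socket
import ssl
from datetime import datetime, timezone


def build_message(facility: int, severity: int, host: str, app: str, text: str) -> bytes:
    """Build a minimal RFC 5424 syslog message with no structured data."""
    pri = facility * 8 + severity  # PRI value as defined by RFC 5424
    stamp = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    # Fields: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID SD MSG
    return f"<{pri}>1 {stamp} {host} {app} - - - {text}".encode("utf-8")


def frame(message: bytes) -> bytes:
    """Apply RFC 5425 octet-counting framing: '<length> <message>'."""
    return str(len(message)).encode("ascii") + b" " + message


def send_over_tls(server: str, message: bytes, port: int = 6514) -> None:
    """Send one framed message over TLS (hypothetical helper, not called below)."""
    context = ssl.create_default_context()  # validates the server chain and host name
    with socket.create_connection((server, port), timeout=5) as raw:
        with context.wrap_socket(raw, server_hostname=server) as tls:
            tls.sendall(frame(message))


if __name__ == "__main__":
    # Facility 16 is local0 and severity 6 is informational.
    msg = build_message(16, 6, "switch-01", "demo", "link state changed")
    print(frame(msg))  # inspect the framed bytes without opening a connection
```

If the server also requires the sender to present a certificate, as the correct option implies for the switch, `context.load_cert_chain(certfile, keyfile)` would be called before `wrap_socket`.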

A user attempts to connect to an SSID configured on an AOS-8 mobility architecture with Mobility Controllers (MCs) and APs. The SSID enforces WPA3-Enterprise security and uses HPE Aruba Networking ClearPass Policy Manager (CPPM) as the authentication server. The WLAN's initial role is logon, and its 802.1X default role is guest.

A user attempts to connect to the SSID, and CPPM sends an Access-Accept with an Aruba-User-Role VSA of "contractor," which exists on the MC.

What does the MC do?

Options:

Applies the rules in the logon role, then guest role, and the contractor role

Applies the rules in the contractor role

Applies the rules in the contractor role and the logon role

Applies the rules in the contractor role and guest role

Answer:

B

Explanation:

In an AOS-8 mobility architecture, the Mobility Controller (MC) manages user roles and policies for wireless clients connecting to SSIDs. When a user connects to an SSID with WPA3-Enterprise security, the MC uses 802.1X authentication to validate the user against an authentication server, in this case, HPE Aruba Networking ClearPass Policy Manager (CPPM). The SSID is configured with specific roles:

Initial role (logon): Applied when the client associates, before 802.1X authentication completes. It typically allows only the traffic needed at that stage, such as DHCP and DNS.

802.1X default role (guest): Applied if 802.1X authentication fails or if no specific role is assigned by the RADIUS server after successful authentication.

In this scenario, the user successfully authenticates, and CPPM sends an Access-Accept message with an Aruba-User-Role Vendor-Specific Attribute (VSA) set to "contractor." The "contractor" role exists on the MC, meaning it is a predefined role in the MC’s configuration.

When the MC receives the Aruba-User-Role VSA, it applies the specified role ("contractor") to the user session, overriding the default 802.1X role ("guest"). The MC does not combine the contractor role with other roles like logon or guest; it applies only the role specified by the RADIUS server (CPPM) in the Aruba-User-Role VSA. This is the standard behavior in AOS-8 for role assignment after successful authentication when a VSA specifies a role.

Option A, "Applies the rules in the logon role, then guest role, and the contractor role," is incorrect because the MC does not apply multiple roles in sequence. The logon role is used only during authentication, and the guest role (default 802.1X role) is overridden by the contractor role specified in the VSA.

Option C, "Applies the rules in the contractor role and the logon role," is incorrect because the logon role is no longer applied once authentication is complete; only the contractor role is applied.

Option D, "Applies the rules in the contractor role and guest role," is incorrect because the guest role (default 802.1X role) is not applied when a specific role is assigned via the Aruba-User-Role VSA.

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"When a user authenticates successfully via 802.1X, the Mobility Controller applies the role specified in the Aruba-User-Role VSA returned by the RADIUS server in the Access-Accept message. If the role specified in the VSA exists on the controller, it is applied to the user session, overriding any default 802.1X role configured for the WLAN. The controller does not combine the VSA-specified role with other roles, such as the initial, logon, or default roles." (Page 305, Role Assignment Section)

Additionally, the HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide notes:

"ClearPass can send the Aruba-User-Role VSA in a RADIUS Access-Accept message to assign a specific role to the user on Aruba Mobility Controllers. The role specified in the VSA takes precedence over any default roles configured on the WLAN, ensuring that the user is placed in the intended role." (Page 289, RADIUS Enforcement Section)
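The selection logic described above can be sketched as a small Python function. This is a simplified model, not controller code: the function name and parameters are hypothetical, and the fallback to the default role when the VSA names a role that is missing from the MC is an assumption of this sketch that the explanation above does not cover.

```python
from typing import Optional, Set


def effective_role(vsa_role: Optional[str], roles_on_mc: Set[str], default_role: str) -> str:
    """Return the single role applied after a successful 802.1X authentication."""
    if vsa_role is not None and vsa_role in roles_on_mc:
        # The server-assigned role replaces the default role; roles are never merged.
        return vsa_role
    # Assumption for this sketch: with no usable VSA, use the 802.1X default role.
    return default_role


if __name__ == "__main__":
    roles = {"logon", "guest", "contractor"}
    print(effective_role("contractor", roles, "guest"))  # scenario from the question
    print(effective_role(None, roles, "guest"))          # Access-Accept without a VSA
```

The function returns exactly one role name, which mirrors why options A, C, and D (all of which combine roles) are incorrect.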

You have detected a Rogue AP using the Security Dashboard. Which two actions should you take in responding to this event? (Select two)

Options:

There is no need to locate the AP if you manually contain it.

This is a serious security event, so you should always contain the AP immediately regardless of your company's specific policies.

You should receive permission before containing an AP, as this action could have legal implications.

For forensic purposes, you should copy out logs with relevant information, such as the time that the AP was detected and the AP's MAC address.

There is no need to locate the AP if the Aruba solution is properly configured to automatically contain it.

Answer:

C, D

Explanation:

When responding to the detection of a Rogue AP, it's important to consider legal implications and to gather forensic evidence:

You should receive permission before containing an AP (Option C), as containing it could disrupt service and may have legal implications, especially if the AP is on a network that the organization does not own.

For forensic purposes, it is essential to document the event by copying out logs with relevant information, such as the time the AP was detected and the AP's MAC address (Option D). This information could be crucial if legal action is taken or if a detailed analysis of the security breach is required.

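Skipping the search for the AP because it has been contained, whether manually or automatically (Options A and E), is a mistake: containment only disrupts wireless connections to the AP, so the device remains in place until someone locates and removes it. Containment applied without regard for context can also interfere with neighboring networks and cause legal issues. Immediate containment without consideration of company policy (Option B) could violate established incident response procedures.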

What is a benefit of Opportunistic Wireless Encryption (OWE)?

Options:

It allows both WPA2-capable and WPA3-capable clients to authenticate to the same WPA-Personal WLAN.

It offers more control over who can connect to the wireless network when compared with WPA2-Personal.

It allows anyone to connect, but provides better protection against eavesdropping than a traditional open network.

It provides protection for wireless clients against both honeypot APs and man-in-the-middle (MITM) attacks.

Answer:

C

Explanation:

Opportunistic Wireless Encryption (OWE) is a WPA3 feature designed for open wireless networks, where no password or authentication is required to connect. OWE enhances security by providing encryption for devices that support it, without requiring a pre-shared key (PSK) or 802.1X authentication.

Option C, "It allows anyone to connect, but provides better protection against eavesdropping than a traditional open network," is correct. In a traditional open network (no encryption), all traffic is sent in plaintext, making it vulnerable to eavesdropping. OWE allows anyone to connect (as it’s an open network), but it negotiates unique encryption keys for each client using a Diffie-Hellman key exchange. This ensures that client traffic is encrypted with AES (e.g., using AES-GCMP), protecting it from eavesdropping. OWE in transition mode also supports non-OWE devices, which connect without encryption, but OWE-capable devices benefit from the added security.

Option A, "It allows both WPA2-capable and WPA3-capable clients to authenticate to the same WPA-Personal WLAN," is incorrect. OWE is for open networks, not WPA-Personal (which uses a PSK). WPA2/WPA3 transition mode (not OWE) allows both WPA2 and WPA3 clients to connect to the same WPA-Personal WLAN.

Option B, "It offers more control over who can connect to the wireless network when compared with WPA2-Personal," is incorrect. OWE is an open network protocol, meaning it offers less control over who can connect compared to WPA2-Personal, which requires a PSK for access.

Option D, "It provides protection for wireless clients against both honeypot APs and man-in-the-middle (MITM) attacks," is incorrect. OWE provides encryption to prevent eavesdropping, but it does not protect against honeypot APs (rogue APs broadcasting the same SSID) or MITM attacks, as it lacks authentication mechanisms to verify the AP’s identity. Protection against such attacks requires 802.1X authentication (e.g., WPA3-Enterprise) or other security measures.

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"Opportunistic Wireless Encryption (OWE) is a WPA3 feature for open networks that allows anyone to connect without a password, but provides better protection against eavesdropping than a traditional open network. OWE uses a Diffie-Hellman key exchange to negotiate unique encryption keys for each client, ensuring that traffic is encrypted with AES-GCMP and protected from unauthorized interception." (Page 290, OWE Overview Section)

Additionally, the HPE Aruba Networking Wireless Security Guide notes:

"OWE enhances security for open WLANs by providing encryption without requiring authentication. It allows any device to connect, but OWE-capable devices benefit from encrypted traffic, offering better protection against eavesdropping compared to a traditional open network where all traffic is sent in plaintext." (Page 35, OWE Benefits Section)
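The unauthenticated key agreement behind OWE can be illustrated with a toy Diffie-Hellman exchange in Python. This is a sketch under loud assumptions: the prime `P`, the base `G`, and the function names are chosen for readability and are far too weak for real use, while actual OWE (RFC 8110) uses standardized groups and a proper key derivation function. What it shows is that each side combines its own private value with the peer's public value and arrives at the same key, without any password.

```python
import hashlib
import secrets

# Toy parameters for illustration only: a 127-bit Mersenne prime and a small base.
P = 2**127 - 1
G = 3


def keypair():
    """Return (private exponent, public value) for one side of the exchange."""
    private = secrets.randbelow(P - 3) + 2  # value in the range [2, P - 2]
    return private, pow(G, private, P)


def session_key(own_private: int, peer_public: int) -> bytes:
    """Hash the shared Diffie-Hellman secret into a 256-bit key."""
    shared = pow(peer_public, own_private, P)
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


if __name__ == "__main__":
    ap_priv, ap_pub = keypair()
    client_priv, client_pub = keypair()
    # Only the public values cross the air; both sides derive the same key.
    print(session_key(client_priv, ap_pub) == session_key(ap_priv, client_pub))

    # A second client runs its own exchange and ends up with its own key.
    ap2_priv, ap2_pub = keypair()
    other_priv, other_pub = keypair()
    print(session_key(other_priv, ap2_pub) != session_key(client_priv, ap_pub))
```

Nothing in the exchange proves who the peer is, which is why OWE stops passive eavesdropping but not honeypot APs or MITM attacks.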

You have an Aruba Mobility Controller (MC) that is locked in a closet. What is another step that Aruba recommends to protect the MC from unauthorized access?

Options:

Use local authentication rather than external authentication to authenticate admins.

Change the password recovery password.

Set the local admin password to a long random value that is unknown or locked up securely.

Disable local authentication of administrators entirely.

Answer:

B

Explanation:

Protecting an Aruba Mobility Controller from unauthorized access involves several layers of security. One recommendation is to change the password recovery password, which is a special type of password used to recover access to the device in the event the admin password is lost. Changing this to something complex and unique adds an additional layer of security in the event the physical security of the device is compromised.

What is a difference between passive and active endpoint classification?

Options:

Passive classification refers exclusively to MAC OUI-based classification, while active classification refers to any other classification method.

Passive classification classifies endpoints based on entries in dictionaries, while active classification uses admin-defined rules to classify endpoints.

Passive classification is only suitable for profiling endpoints in small business environments, while enterprises should use active classification exclusively.

Passive classification analyzes traffic that endpoints send as part of their normal functions; active classification involves sending requests to endpoints.

Answer:

D

Explanation:

HPE Aruba Networking ClearPass Policy Manager (CPPM) uses endpoint classification (profiling) to identify and categorize devices on the network, enabling policy enforcement based on device type, OS, or other attributes. CPPM supports two primary profiling methods: passive and active classification.

Passive Classification: This method involves observing network traffic that endpoints send as part of their normal operation, without CPPM sending any requests to the device. Examples include DHCP fingerprinting (analyzing DHCP Option 55), HTTP User-Agent string analysis, and TCP fingerprinting (analyzing TTL and window size). Passive classification is non-intrusive and does not generate additional network traffic.

Active Classification: This method involves CPPM sending requests to the endpoint to gather information. Examples include SNMP scans (to query device details), WMI scans (for Windows devices), and SSH scans (to gather system information). Active classification is more intrusive and may require credentials or network access to the device.

Option A, "Passive classification refers exclusively to MAC OUI-based classification, while active classification refers to any other classification method," is incorrect. Passive classification includes more than just MAC OUI-based classification (e.g., DHCP fingerprinting, TCP fingerprinting). MAC OUI (Organizationally Unique Identifier) analysis is one passive method, but not the only one. Active classification specifically involves sending requests, not just "any other method."

Option B, "Passive classification classifies endpoints based on entries in dictionaries, while active classification uses admin-defined rules to classify endpoints," is incorrect. Both passive and active classification use CPPM’s fingerprint database (not "dictionaries") to match device attributes. Admin-defined rules are used for policy enforcement, not classification, and apply to both methods.

Option C, "Passive classification is only suitable for profiling endpoints in small business environments, while enterprises should use active classification exclusively," is incorrect. Passive classification is widely used in enterprises because it is non-intrusive and scalable. Active classification is often used in conjunction with passive methods to gather more detailed information, but enterprises do not use it exclusively.

Option D, "Passive classification analyzes traffic that endpoints send as part of their normal functions; active classification involves sending requests to endpoints," is correct. This accurately describes the fundamental difference between the two methods: passive classification observes existing traffic, while active classification actively queries the device.

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"Passive classification analyzes traffic that endpoints send as part of their normal functions, such as DHCP requests, HTTP traffic, or TCP packets, without ClearPass sending any requests to the device. Examples include DHCP fingerprinting and TCP fingerprinting. Active classification involves ClearPass sending requests to the endpoint to gather information, such as SNMP scans, WMI scans, or SSH scans, which may require credentials or network access." (Page 246, Passive vs. Active Profiling Section)

Additionally, the ClearPass Device Insight Data Sheet notes:

"Passive classification observes network traffic generated by endpoints during normal operation, such as DHCP or HTTP traffic, to identify devices without generating additional traffic. Active classification, in contrast, sends requests to endpoints (e.g., SNMP or WMI scans) to gather detailed information, which can be more intrusive but provides deeper insights." (Page 3, Profiling Methods Section)

Refer to the exhibit.

Device A is establishing an HTTPS session with the Arubapedia web site using Chrome. The Arubapedia web server sends the certificate shown in the exhibit.

What does the browser do as part of validating the web server certificate?

Options:

It uses the public key in the DigiCert SHA2 Secure Server CA certificate to check the certificate's signature.

It uses the public key in the DigiCert root CA certificate to check the certificate's signature.

It uses the private key in the DigiCert SHA2 Secure Server CA to check the certificate's signature.

It uses the private key in the Arubapedia web site's certificate to check that certificate's signature.

Answer:

A

Explanation:

When a browser, like Chrome, is validating a web server's certificate, it uses the public key in the certificate's signing authority to verify the certificate's digital signature. In the case of the exhibit, the browser would use the public key in the DigiCert SHA2 Secure Server CA certificate to check the signature of the Arubapedia web server's certificate. This process ensures that the certificate was indeed issued by the claimed Certificate Authority (CA) and has not been tampered with.

What is a difference between RADIUS and TACACS+?

Options:

RADIUS combines the authentication and authorization process while TACACS+ separates them.

RADIUS uses TCP for its connection protocol, while TACACS+ uses UDP for its connection protocol.

RADIUS encrypts the complete packet, while TACACS+ only offers partial encryption.

RADIUS uses Attribute Value Pairs (AVPs) in its messages, while TACACS+ does not use them.

Answer:

A

Explanation:

RADIUS and TACACS+ are both protocols used for networking authentication, but they handle the processes of authentication and authorization differently. RADIUS (Remote Authentication Dial-In User Service) combines authentication and authorization into a single process, whereas TACACS+ (Terminal Access Controller Access-Control System Plus) separates these processes. This separation in TACACS+ allows more flexible policy enforcement and better control over commands a user can execute. This difference is well-documented in various network security resources, including Cisco's technical documentation and security protocol manuals.

What is one thing you can determine from the exhibits?

Options:

CPPM originally assigned the client to a role for non-profiled devices. It sent a CoA to the authenticator after it categorized the device.

CPPM sent a CoA message to the client to prompt the client to submit information that CPPM can use to profile it.

CPPM was never able to determine a device category for this device, so you need to check settings in the network infrastructure to ensure they support CPPM's endpoint classification.

CPPM first assigned the client to a role based on the user's identity. Then, it discovered that the client had an invalid category, so it sent a CoA to blacklist the client.

Answer:

A

Explanation:

Based on the exhibits which seem to show RADIUS authentication and CoA logs, one can determine that CPPM (ClearPass Policy Manager) initially assigned the client to a role meant for non-profiled devices and then sent a CoA to the network access device (authenticator) once the device was categorized. This is a common workflow in network access control, where a device is first given limited access until it can be properly identified, after which appropriate access policies are applied.

What correctly describes the Pairwise Master Key (PMK) in the specified wireless security protocol?

Options:

In WPA3-Enterprise, the PMK is unique per session and derived using Simultaneous Authentication of Equals.

In WPA3-Personal, the PMK is unique per session and derived using Simultaneous Authentication of Equals.

In WPA3-Personal, the PMK is derived directly from the passphrase and is the same for every session.

In WPA3-Personal, the PMK is the same for each session and is communicated to clients that authenticate.

Answer:

B

Explanation:

In WPA3-Personal, the Pairwise Master Key (PMK) is unique for each session and is derived using Simultaneous Authentication of Equals (SAE). SAE is the password-authenticated key exchange that WPA3-Personal uses in place of the WPA2 Pre-Shared Key (PSK) method, where the PMK is computed directly from the passphrase and SSID and is therefore the same for every session. Because each SAE exchange produces a fresh PMK, WPA3-Personal provides forward secrecy and resists offline dictionary attacks. WPA3-Enterprise does not use SAE; its PMK comes from the 802.1X/EAP exchange, which is why option A is incorrect. SAE and its use in generating a unique PMK are described in the Wi-Fi Alliance's WPA3 specification and related technical documentation.
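For contrast with SAE, the Python sketch below shows how a WPA2-Personal PMK is computed: PBKDF2 with HMAC-SHA1 over the passphrase, salted with the SSID, using 4096 iterations and a 256-bit output. Because none of those inputs change between connections, the result is identical for every client and every session, which is the property that SAE's per-session exchange removes. The function name, passphrase, and SSID values are made up for illustration.

```python
import hashlib


def wpa2_personal_pmk(passphrase: str, ssid: str) -> bytes:
    """Derive the 256-bit WPA2-Personal PMK from the passphrase and SSID."""
    return hashlib.pbkdf2_hmac(
        "sha1",
        passphrase.encode("utf-8"),
        ssid.encode("utf-8"),  # the SSID acts as the salt
        4096,                  # iteration count used by WPA2-Personal
        32,                    # 32 bytes = 256 bits
    )


if __name__ == "__main__":
    first = wpa2_personal_pmk("example passphrase", "ExampleSSID")
    second = wpa2_personal_pmk("example passphrase", "ExampleSSID")
    print(first == second)  # deterministic: same inputs, same PMK
    print(first.hex())
```

Anyone who knows the passphrase and SSID can compute this same value offline, which is also why a captured WPA2 handshake can be attacked with a dictionary.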

What is a benefit of using network aliases in ArubaOS firewall policies?

Options:

You can associate a reputation score with the network alias to create rules that filter traffic based on reputation rather than IP.

You can use the aliases to translate client IP addresses to other IP addresses on the other side of the firewall.

You can adjust the IP addresses in the aliases, and the rules using those aliases automatically update.

You can use the aliases to conceal the true IP addresses of servers from potentially untrusted clients.

Answer:

C

Explanation:

In ArubaOS firewall policies, using network aliases allows administrators to manage groups of IP addresses more efficiently. By associating multiple IPs with a single alias, any changes made to the alias (like adding or removing IP addresses) are automatically reflected in all firewall rules that reference that alias. This significantly simplifies the management of complex rulesets and ensures consistency across security policies, reducing administrative overhead and minimizing the risk of errors.
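The indirection that makes this work can be modeled in a few lines of Python. This is a conceptual sketch, not ArubaOS configuration: the alias name `internal-servers`, the subnets, and the `action_for` helper are hypothetical. Rules store the alias name, not the addresses, so editing the alias changes what every referencing rule matches.

```python
import ipaddress
from typing import Dict, List, Tuple

# An alias maps one name to a list of networks (hypothetical values).
aliases: Dict[str, list] = {
    "internal-servers": [ipaddress.ip_network("10.1.10.0/24")],
}

# Each rule refers to the alias by name: (destination alias, action).
rules: List[Tuple[str, str]] = [("internal-servers", "permit")]


def action_for(destination: str, default: str = "deny") -> str:
    """Return the action of the first rule whose alias contains the destination."""
    addr = ipaddress.ip_address(destination)
    for alias_name, action in rules:
        if any(addr in network for network in aliases[alias_name]):
            return action
    return default


if __name__ == "__main__":
    print(action_for("10.1.20.5"))  # not yet covered by the alias
    # Extend the alias once; the rule list itself is untouched.
    aliases["internal-servers"].append(ipaddress.ip_network("10.1.20.0/24"))
    print(action_for("10.1.20.5"))  # now matched through the same rule
```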

What is the purpose of an Enrollment over Secure Transport (EST) server?

Options:

It acts as an intermediate Certification Authority (CA) that signs end-entity certificates.

It helps admins to avoid expired certificates with less management effort.

It provides a secure central repository for private keys associated with devices' digital certificates.

It provides a more secure alternative to private CAs at less cost than a public CA.

Answer:

B

Explanation:

EST (Enrollment over Secure Transport) is a protocol designed to streamline the certificate management process. It enables automated and secure enrollment and renewal (re-enrollment) of digital certificates over an authenticated TLS connection, which significantly reduces the management overhead typically associated with digital certificates. With EST, devices can request replacement certificates before the current ones expire, so administrators avoid expired certificates without significant manual intervention.

You are managing an Aruba Mobility Controller (MC). What is a reason for adding a "Log Settings" definition in the ArubaOS Diagnostics > System > Log Settings page?

Options:

Configuring the Syslog server settings for the server to which the MC forwards logs for a particular category and level

Configuring the MC to generate logs for a particular event category and level, but only for a specific user or AP.

Configuring a filter that you can apply to a defined Syslog server in order to filter events by subcategory

Configuring the log facility and log format that the MC will use for forwarding logs to all Syslog servers

Answer:

A

Explanation:

The primary reason for adding a "Log Settings" definition in the ArubaOS Diagnostics > System > Log Settings page is to configure the Syslog server settings for the server to which the Mobility Controller (MC) forwards logs for a particular category and level. This setting enables the MC to send detailed logs to a Syslog server for centralized logging and monitoring, which is essential for troubleshooting, security analysis, and compliance with various policies.

You have an Aruba solution with multiple Mobility Controllers (MCs) and campus APs. You want to deploy a WPA3-Enterprise WLAN and authenticate users to Aruba ClearPass Policy Manager (CPPM) with EAP-TLS.

What is a guideline for ensuring a successful deployment?

Options:

Avoid enabling CNSA mode on the WLAN, which requires the internal MC RADIUS server.

Ensure that clients trust the root CA for the MCs’ Server Certificates.

Educate users in selecting strong passwords with at least 8 characters.

Deploy certificates to clients, signed by a CA that CPPM trusts.

Answer:

D

Explanation:

For WPA3-Enterprise with EAP-TLS, clients must have their own certificates installed, because EAP-TLS relies on a mutual exchange of certificates for authentication. Deploying client certificates signed by a CA that CPPM trusts ensures that ClearPass Policy Manager can verify the client certificates during the TLS handshake. Clients do also need to trust a root CA for the server side of the exchange, but the server in this deployment is CPPM, not the MCs, so trusting the root CA for the MCs' server certificates (Option B) does not help. Option C is irrelevant because EAP-TLS does not use passwords.

From which solution can ClearPass Policy Manager (CPPM) receive detailed information about client device type, OS, and status?

Options:

ClearPass Onboard

ClearPass Access Tracker

ClearPass OnGuard

ClearPass Guest

Answer:

C

Explanation:

ClearPass Policy Manager (CPPM) can receive detailed information about client device type, OS, and status from ClearPass OnGuard. ClearPass OnGuard is part of the ClearPass suite and provides posture assessment and endpoint health checks. It gathers detailed information on the status and security posture of devices trying to connect to the network, such as whether antivirus software is up to date, which operating system is running, and other details that characterize the device's compliance with the network's security policies.

Which is a correct description of a stage in the Lockheed Martin kill chain?

Options:

In the delivery stage, malware collects valuable data and delivers or exfiltrates it to the hacker.

In the reconnaissance stage, the hacker assesses the impact of the attack and how much information was exfiltrated.

In the weaponization stage, which occurs after malware has been delivered to a system, the malware executes its function.

In the exploitation and installation phases, malware creates a backdoor into the infected system for the hacker.

Answer:

D

Explanation:

The Lockheed Martin Cyber Kill Chain model describes the stages of a cyber attack. In the exploitation phase, the attacker uses vulnerabilities to gain access to the system. Following this, in the installation phase, the attacker installs a backdoor or other malicious software to ensure persistent access to the compromised system. This backdoor can then be used to control the system, steal data, or execute additional attacks.
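The ordering of the seven stages is what rules out the other options, so it can help to write the sequence down explicitly. The Python sketch below encodes the stage order of the Lockheed Martin model as an `IntEnum`; the class and helper names are illustrative choices.

```python
from enum import IntEnum


class KillChainStage(IntEnum):
    """The seven stages of the Lockheed Martin Cyber Kill Chain, in order."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


def happens_before(first: KillChainStage, second: KillChainStage) -> bool:
    """Return True if `first` comes earlier in the chain than `second`."""
    return first < second


if __name__ == "__main__":
    # Weaponization precedes delivery, so it cannot occur "after malware has been delivered".
    print(happens_before(KillChainStage.WEAPONIZATION, KillChainStage.DELIVERY))
    for stage in KillChainStage:
        print(stage.value, stage.name)
```

Exfiltration belongs to the final stage (actions on objectives), not to delivery or reconnaissance.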

You need to deploy an Aruba Instant AP where users can physically reach it. What are two recommended options for enhancing security for management access to the AP? (Select two)

Options:

Disable its console ports.

Place a Tamper Evident Label (TEL) over its console port.

Disable the Web UI.

Configure WPA3-Enterprise security on the AP.

Install a CA-signed certificate.

Answer:

C, E

Explanation:

When deploying an Aruba Instant AP in a location where users can physically access it, enhancing security for management access could involve several measures:

C. Disabling the Web UI will prevent unauthorized access via the browser-based management interface, which could be a security risk if the AP is within physical reach of untrusted parties.

E. Installing a CA-signed certificate helps ensure that any communication with the AP's management interface is encrypted and authenticated, preventing man-in-the-middle attacks and eavesdropping.

You are checking the Security Dashboard in the Web UI for your AOS solution and see that Wireless Intrusion Prevention (WIP) has discovered a rogue radio operating in ad hoc mode with open security. What correctly describes a threat that the radio could pose?

Options:

It could be attempting to conceal itself from detection by changing its BSSID and SSID frequently.

It could open a backdoor into the corporate LAN for unauthorized users.

It is running in a non-standard 802.11 mode and could effectively jam the wireless signal.

It is flooding the air with many wireless frames in a likely attempt at a DoS attack.

Answer:

B

Explanation:

The AOS Security Dashboard in an AOS-8 solution (Mobility Controllers or Mobility Master) provides visibility into wireless threats detected by the Wireless Intrusion Prevention (WIP) system. The scenario describes a rogue radio operating in ad hoc mode with open security. Ad hoc mode in 802.11 allows devices to communicate directly with each other without an access point (AP), forming a peer-to-peer network. Open security means no encryption or authentication is required to connect.

Ad Hoc Mode Threat: An ad hoc network created by a rogue device can pose significant risks, especially if a corporate client connects to it. Since ad hoc mode allows direct device-to-device communication, a client that joins the ad hoc network might inadvertently bridge the corporate LAN to the rogue network, especially if the client is also connected to the corporate network (e.g., via a wired connection or another wireless interface).

Option B, "It could open a backdoor into the corporate LAN for unauthorized users," is correct. If a corporate client connects to the rogue ad hoc network (e.g., due to a misconfiguration or auto-connect setting), the client might bridge the ad hoc network to the corporate LAN, allowing unauthorized users on the ad hoc network to access corporate resources. This is a common threat with ad hoc networks, as they bypass the security controls of the corporate AP infrastructure.

Option A, "It could be attempting to conceal itself from detection by changing its BSSID and SSID frequently," is incorrect. While changing BSSID and SSID can be a tactic to evade detection, this is not a typical characteristic of ad hoc networks and is not implied by the scenario. Ad hoc networks are generally visible to WIP unless explicitly hidden.

Option C, "It is running in a non-standard 802.11 mode and could effectively jam the wireless signal," is incorrect. Ad hoc mode is a standard 802.11 mode, not a non-standard one. While a rogue device could potentially jam the wireless signal, this is not a direct threat posed by ad hoc mode with open security.

Option D, "It is flooding the air with many wireless frames in a likely attempt at a DoS attack," is incorrect. There is no indication in the scenario that the rogue radio is flooding the air with frames. While ad hoc networks can be used in DoS attacks, the primary threat in this context is the potential for unauthorized access to the corporate LAN.

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"A rogue radio operating in ad hoc mode with open security poses a significant threat, as it can open a backdoor into the corporate LAN. If a corporate client connects to the ad hoc network, it may bridge the ad hoc network to the corporate LAN, allowing unauthorized users to access corporate resources. This is particularly dangerous if the client is also connected to the corporate network via another interface." (Page 422, Wireless Threats Section)

Additionally, the HPE Aruba Networking Security Guide notes:

"Ad hoc networks detected by WIP are a concern because they can act as a backdoor into the corporate LAN. A client that joins an ad hoc network with open security may inadvertently allow unauthorized users to access the corporate network, bypassing the security controls of authorized APs." (Page 73, Ad Hoc Network Threats Section)

What is a guideline for deploying Aruba ClearPass Device Insight?

Options:

Deploy a Device Insight Collector at every site in the corporate WAN to reduce the impact on WAN links.

Make sure that Aruba devices trust the root CA certificate for the ClearPass Device Insight Analyzer's HTTPS certificate.

Configure remote mirroring on access layer Aruba switches, using Device Insight Analyzer as the destination IP.

For companies with multiple sites, deploy a pair of Device Insight Collectors at the HQ or the central data center.

Answer:

D

Explanation:

For deploying Aruba ClearPass Device Insight effectively, especially in environments with multiple sites, it is recommended to deploy a pair of Device Insight Collectors at the headquarters or the central data center. This deployment strategy helps in centralizing the data collection and analysis, which simplifies management and enhances performance by reducing the data load on the WAN links connecting different sites. Centralizing the collectors at a major site or data center allows for better scalability and reliability of the network management system. This configuration also aids in achieving a more consistent and comprehensive monitoring and analysis of the devices across the network, ensuring that the security and management policies are uniformly applied. This recommendation is based on best practices for network architecture design, particularly those discussed in Aruba’s deployment guides and network management strategies.

The monitoring admin has asked you to set up an AOS-CX switch to meet these criteria:

Send logs to a SIEM Syslog server at 10.4.13.15 at the standard TCP port (514)

Send a log for all events at the "warning" level or above; do not send logs with a lower level than "warning"

The switch did not have any "logging" configuration on it. You then entered this command:

AOS-CX(config)# logging 10.4.13.15 tcp vrf default

What should you do to finish configuring to the requirements?

Options:

Specify the "warning" severity level for the logging server.

Add logging categories at the global level.

Ask for the Syslog password and configure it on the switch.

Configure logging as a debug destination.

Answer:

A

Explanation:

The task is to configure an AOS-CX switch to send logs to a SIEM Syslog server at IP address 10.4.13.15 using TCP port 514, with logs for events at the "warning" severity level or above (i.e., warning, error, critical, alert, emergency). The initial command entered is:

AOS-CX(config)# logging 10.4.13.15 tcp vrf default

This command configures the switch to send logs to the Syslog server at 10.4.13.15 using TCP (port 514 is the default for TCP Syslog unless specified otherwise) and the default VRF. However, this command alone does not specify the severity level of the logs to be sent, which is a requirement of the task.

Severity Level Configuration: AOS-CX switches allow you to specify the severity level for logs sent to a Syslog server. The severity levels, in increasing order of severity, are: debug, informational, notice, warning, error, critical, alert, and emergency. The requirement is to send logs at the "warning" level or above, meaning warning, error, critical, alert, and emergency logs should be sent, but debug, informational, and notice logs should not.

Option A, "Specify the ‘warning’ severity level for the logging server," is correct. To meet the requirement, you need to add the severity level to the logging configuration for the specific Syslog server. The command to do this is:

AOS-CX(config)# logging 10.4.13.15 severity warning

This command ensures that only logs with a severity of warning or higher are sent to the Syslog server at 10.4.13.15. Since the initial command already specified TCP and the default VRF, this additional command completes the configuration.
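The threshold behavior described above can be illustrated with a minimal Python sketch. It only models the ordering of severity names given in this explanation; the function names are hypothetical and nothing here reflects how the switch implements filtering internally.

```python
from typing import List

# Severity names in increasing order of severity, as listed in the explanation.
SEVERITIES = ["debug", "informational", "notice", "warning",
              "error", "critical", "alert", "emergency"]


def is_forwarded(event_severity: str, threshold: str = "warning") -> bool:
    """Return True if an event at event_severity meets or exceeds threshold."""
    return SEVERITIES.index(event_severity) >= SEVERITIES.index(threshold)


def forwarded_levels(threshold: str = "warning") -> List[str]:
    """List every severity that would be sent for the given threshold."""
    return [s for s in SEVERITIES if is_forwarded(s, threshold)]


if __name__ == "__main__":
    # Levels sent to the server when the threshold is "warning".
    print(forwarded_levels("warning"))
```

With a "warning" threshold, the sketch keeps warning, error, critical, alert, and emergency, and drops debug, informational, and notice, which matches the requirement in the question.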

Option B, "Add logging categories at the global level," is incorrect. Logging categories (e.g., system, security, network) are used to filter logs based on the type of event, not the severity level. The requirement is about severity ("warning" or above), not specific categories, so this step is not necessary to meet the stated criteria.

Option C, "Ask for the Syslog password and configure it on the switch," is incorrect. Syslog servers typically do not require a password for receiving logs, and AOS-CX switches do not have a configuration option to specify a Syslog password. Authentication or encryption for Syslog (e.g., using TLS) is not mentioned in the requirements.

Option D, "Configure logging as a debug destination," is incorrect. Configuring a debug destination (e.g., using the debug command) is used to send debug-level logs to a destination (e.g., console, buffer, or Syslog), but the requirement is to send logs at the "warning" level or above, not debug-level logs. Additionally, the logging command already specifies the Syslog server as the destination.

The HPE Aruba Networking AOS-CX 10.12 System Management Guide states:

"To configure a Syslog server on an AOS-CX switch, use the logging

Additionally, the guide notes:

"Severity levels for logging on AOS-CX switches are, in increasing order: debug, informational, notice, warning, error, critical, alert, emergency. Specifying a severity level of ‘warning’ ensures that only logs at that level or higher are sent to the configured destination." (Page 90, Logging Severity Levels Section)

You have configured a WLAN to use Enterprise security with the WPA3 version.

How does the WLAN handle encryption?

Options:

Traffic is encrypted with TKIP and keys derived from a PMK shared by all clients on the WLAN.

Traffic is encrypted with TKIP and keys derived from a unique PMK per client.

Traffic is encrypted with AES and keys derived from a PMK shared by all clients on the WLAN.

Traffic is encrypted with AES and keys derived from a unique PMK per client.

Answer:

D

Explanation:

WPA3-Enterprise is a security protocol introduced to enhance the security of wireless networks, particularly in enterprise environments. It builds on the foundation of WPA2 but introduces stronger encryption and key management practices. In WPA3-Enterprise, authentication is typically performed using 802.1X, and encryption is handled using the Advanced Encryption Standard (AES).

WPA3-Enterprise Encryption: WPA3-Enterprise uses AES with the Galois/Counter Mode Protocol (GCMP) or the Counter Mode with Cipher Block Chaining Message Authentication Code Protocol (CCMP), both of which are AES-based encryption methods. WPA3 does not use TKIP (Temporal Key Integrity Protocol), a legacy encryption method that was used in WPA and early WPA2 deployments and is considered insecure.

Pairwise Master Key (PMK): In WPA3-Enterprise, the PMK is derived during the 802.1X authentication process (e.g., via EAP-TLS or EAP-TTLS). Each client authenticates individually with the authentication server (e.g., ClearPass), resulting in a unique PMK for each client. This PMK is then used to derive session keys (Pairwise Transient Keys, PTKs) for encrypting the client’s traffic, ensuring that each client’s traffic is encrypted with unique keys.

Option A, "Traffic is encrypted with TKIP and keys derived from a PMK shared by all clients on the WLAN," is incorrect because WPA3 does not use TKIP (it uses AES), and the PMK is not shared among clients in WPA3-Enterprise; each client has a unique PMK.

Option B, "Traffic is encrypted with TKIP and keys derived from a unique PMK per client," is incorrect because WPA3 does not use TKIP; it uses AES.

Option C, "Traffic is encrypted with AES and keys derived from a PMK shared by all clients on the WLAN," is incorrect because, in WPA3-Enterprise, the PMK is unique per client, not shared.

Option D, "Traffic is encrypted with AES and keys derived from a unique PMK per client," is correct. WPA3-Enterprise uses AES for encryption, and each client derives a unique PMK during 802.1X authentication, which is used to generate unique session keys for encryption.
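To make the "unique PMK per client" point concrete, here is a simplified Python sketch. It is not the real 802.11 key derivation function: it uses a single HMAC-SHA256 call as a stand-in, and the MAC addresses, nonces, and PMK values are made-up test inputs. It only shows that session keys derived from different PMKs differ, even when everything else is identical.

```python
import hashlib
import hmac


def derive_session_key(pmk: bytes, ap_mac: bytes, client_mac: bytes,
                       anonce: bytes, snonce: bytes) -> bytes:
    """Simplified stand-in for PTK derivation (assumption: not the real KDF).

    The key material depends on the PMK plus both MAC addresses and both
    nonces, mirroring the inputs of the 4-way handshake.
    """
    context = (min(ap_mac, client_mac) + max(ap_mac, client_mac)
               + min(anonce, snonce) + max(anonce, snonce))
    return hmac.new(pmk, b"Pairwise key expansion" + context,
                    hashlib.sha256).digest()


if __name__ == "__main__":
    ap = bytes.fromhex("a8bd27000001")        # hypothetical AP MAC
    client = bytes.fromhex("020000000001")    # hypothetical client MAC
    anonce, snonce = b"\x11" * 32, b"\x22" * 32

    pmk_alice = b"\x01" * 32  # PMK from one user's 802.1X exchange
    pmk_bob = b"\x02" * 32    # PMK from another user's 802.1X exchange

    key_alice = derive_session_key(pmk_alice, ap, client, anonce, snonce)
    key_bob = derive_session_key(pmk_bob, ap, client, anonce, snonce)
    print("keys differ:", key_alice != key_bob)
```

The contrast with a shared PMK is that any client holding the same PMK and observing the handshake values could compute the same key material, which is the risk that per-client PMKs avoid.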

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"WPA3-Enterprise enhances security by using AES encryption with GCMP or CCMP. In WPA3-Enterprise mode, each client authenticates via 802.1X, resulting in a unique Pairwise Master Key (PMK) for each client. The PMK is used to derive session keys (Pairwise Transient Keys, PTKs) that encrypt the client’s traffic with AES, ensuring that each client’s traffic is protected with unique keys. WPA3 does not support TKIP, which is a legacy encryption method." (Page 285, WPA3-Enterprise Security Section)

Additionally, the HPE Aruba Networking Wireless Security Guide notes:

"WPA3-Enterprise requires 802.1X authentication, which generates a unique PMK for each client. This PMK is used to derive AES-based session keys, providing individualized encryption for each client’s traffic and eliminating the risks associated with shared keys." (Page 32, WPA3 Security Features Section)

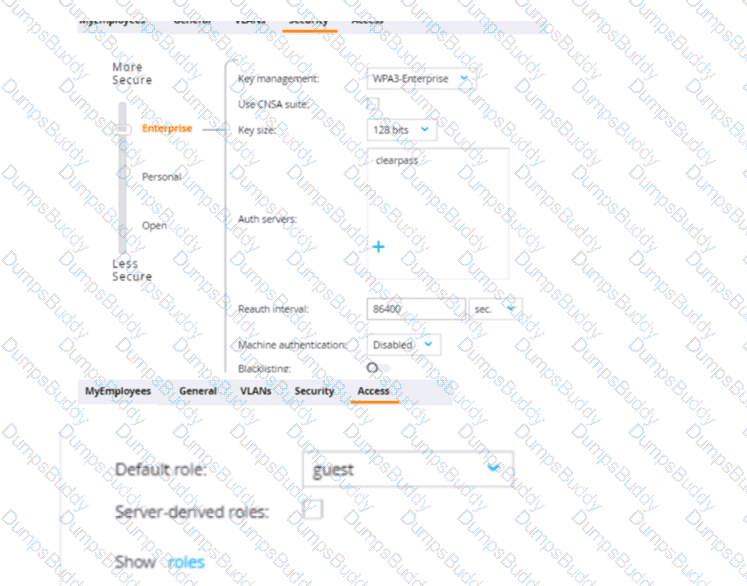

The first exhibit shows roles on the MC, listed in alphabetic order. The second and third exhibits show the configuration for a WLAN to which a client connects. Which description of the role assigned to a user under various circumstances is correct?

Options:

A user fails 802.1X authentication. The client remains connected, but is assigned the "guest" role.

A user authenticates successfully with 802.1X, and the RADIUS Access-Accept includes an Aruba-User-Role VSA set to "employee1." The client's role is "guest."

A user authenticates successfully with 802.1X, and the RADIUS Access-Accept includes an Aruba-User-Role VSA set to "employee." The client's role is "guest."

A user authenticates successfully with 802.1X, and the RADIUS Access-Accept includes an Aruba-User-Role VSA set to "employee1." The client's role is "employee1."

Answer:

D

Explanation:

In a WLAN setup that uses 802.1X for authentication, the role assigned to a user is determined by the result of the authentication process. When a user successfully authenticates via 802.1X, the RADIUS server may include a Vendor-Specific Attribute (VSA), such as the Aruba-User-Role, in the Access-Accept message. This attribute specifies the role that should be assigned to the user. If the RADIUS Access-Accept message includes an Aruba-User-Role VSA set to "employee1", the client should be assigned the "employee1" role, as per the VSA, and not the default "guest" role. The "guest" role would typically be a fallback if no other role is specified or if the authentication fails.
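The precedence described in this explanation can be sketched in Python. This is an illustrative model only: the role list and default role are hypothetical values, since the exhibits are not reproduced here, and the fallback to the default role when a VSA names an undefined role is an assumption rather than documented behavior.

```python
from typing import Optional, Set


def resolve_role(vsa_role: Optional[str], defined_roles: Set[str],
                 default_role: str) -> str:
    """Pick the role for a client that has passed 802.1X authentication.

    A role supplied in the Aruba-User-Role VSA wins when it is defined on
    the controller; otherwise the configured default role applies
    (assumption for this sketch).
    """
    if vsa_role is not None and vsa_role in defined_roles:
        return vsa_role
    return default_role


if __name__ == "__main__":
    roles = {"employee1", "guest"}  # hypothetical roles on the MC
    print(resolve_role("employee1", roles, default_role="guest"))
    print(resolve_role(None, roles, default_role="guest"))
```

The first call models the correct answer: the VSA names a role that exists, so that role is assigned instead of the default.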

You are troubleshooting an authentication issue for Aruba switches that enforce 802.1X to a cluster of Aruba ClearPass Policy Manager (CPPMs). You know that CPPM is receiving and processing the authentication requests because the Aruba switches are showing Access-Rejects in their statistics. However, you cannot find the record for the Access-Rejects in CPPM Access Tracker.

What is something you can do to look for the records?

Options:

Make sure that CPPM cluster settings are configured to show Access-Rejects

Verify that you are logged in to the CPPM UI with read-write, not read-only, access

Click Edit in Access Viewer and make sure that the correct servers are selected.

Go to the CPPM Event Viewer, because this is where RADIUS Access Rejects are stored.

Answer:

A

Explanation:

If Access-Reject records are not showing up in the CPPM Access Tracker, one action you can take is to ensure that the CPPM cluster settings are configured to display Access-Rejects. Cluster-wide settings in CPPM can affect which records are visible in Access Tracker. Ensuring that these settings are correctly configured will allow you to view all relevant authentication records, including Access-Rejects.

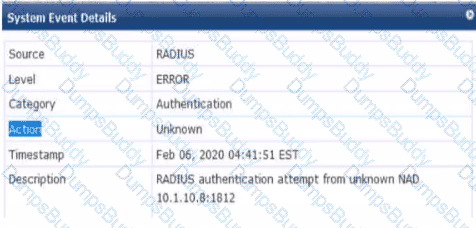

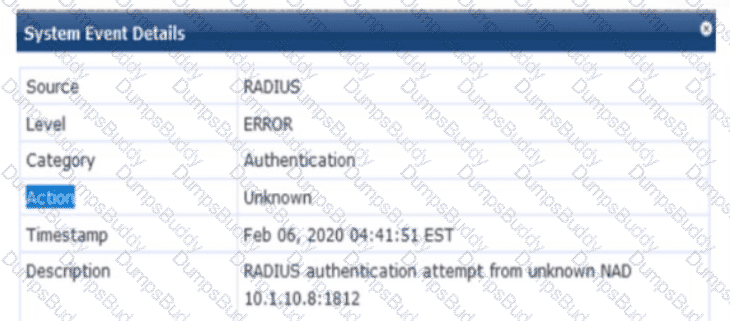

Refer to the exhibit.

You are deploying a new ArubaOS Mobility Controller (MC), which is enforcing authentication to Aruba ClearPass Policy Manager (CPPM). The authentication is not working correctly, and you find the error shown in the exhibit in the CPPM Event Viewer.

What should you check?

Options:

that the MC has been added as a domain machine on the Active Directory domain with which CPPM is synchronized

that the shared secret configured for the CPPM authentication server matches the one defined for the device on CPPM

that the IP address that the MC is using to reach CPPM matches the one defined for the device on CPPM

that the MC has valid admin credentials configured on it for logging into the CPPM

Answer:

C

Explanation:

Given the error message from the ClearPass Policy Manager (CPPM) Event Viewer, indicating a RADIUS authentication attempt from an unknown Network Access Device (NAD), you should check that the IP address the Mobility Controller (MC) is using to communicate with CPPM matches the IP address defined for the MC in the CPPM's device inventory. If there is a mismatch in IP addresses, CPPM will not recognize the MC as a known device and will not process the authentication request, leading to the error observed.
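The lookup behavior described above can be sketched as follows. This is a toy model in Python, not ClearPass code: the device table, IP addresses, and message wording are hypothetical, and the sketch only shows why a request arriving from an unexpected source IP is not matched to the device entry.

```python
import ipaddress

# Hypothetical device table on the RADIUS server, keyed by the IP address
# the server expects the network access device (NAD) to use as its source.
NETWORK_DEVICES = {
    ipaddress.ip_address("10.1.1.10"): {"name": "MC-1"},
}


def handle_radius_request(source_ip: str) -> str:
    """Match an incoming request to a NAD entry by its source IP address."""
    nad = NETWORK_DEVICES.get(ipaddress.ip_address(source_ip))
    if nad is None:
        # No entry for this source address: the request is not processed.
        return "unknown NAD " + source_ip
    return "processing request from " + nad["name"]


if __name__ == "__main__":
    print(handle_radius_request("10.1.1.10"))  # MC uses the expected address
    print(handle_radius_request("10.1.2.10"))  # MC sources from another interface
```

In practice, a mismatch like the second call often occurs when the controller sources RADIUS traffic from a different VLAN interface than the one entered on the server.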

You have been asked to send RADIUS debug messages from an AOS-CX switch to a central SIEM server at 10.5.15.6. The server is already defined on the switch with this command:

logging 10.5.15.6

You enter this command:

debug radius all

What is the correct debug destination?

Options:

file

console

buffer

syslog

Answer:

D

Explanation:

The scenario involves an AOS-CX switch that needs to send RADIUS debug messages to a central SIEM server at 10.5.15.6. The switch has already been configured to send logs to the SIEM server with the command logging 10.5.15.6, and the command debug radius all has been entered to enable RADIUS debugging.

Debug Command: The debug radius all command enables debugging for all RADIUS-related events on the AOS-CX switch, generating detailed debug messages for RADIUS authentication, accounting, and other operations.

Debug Destination: Debug messages on AOS-CX switches can be sent to various destinations, such as the console, a file, the debug buffer, or a Syslog server. The logging 10.5.15.6 command indicates that the switch is configured to send logs to a Syslog server at 10.5.15.6 (using UDP port 514 by default, unless specified otherwise).

Option D, "syslog," is correct. To send RADIUS debug messages to the SIEM server, the debug destination must be set to "syslog," as the SIEM server is already defined as a Syslog destination with logging 10.5.15.6. The command to set the debug destination to Syslog is debug destination syslog, which ensures that the RADIUS debug messages are sent to the configured Syslog server (10.5.15.6).

Option A, "file," is incorrect. Sending debug messages to a file (e.g., using debug destination file) stores the messages on the switch’s filesystem, not on the SIEM server.

Option B, "console," is incorrect. Sending debug messages to the console (e.g., using debug destination console) displays them on the switch’s console session, not on the SIEM server.

Option C, "buffer," is incorrect. Sending debug messages to the buffer (e.g., using debug destination buffer) stores them in the switch’s debug buffer, which can be viewed with show debug buffer, but does not send them to the SIEM server.
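The difference between the four destinations can be summarized with a small Python model. It is a conceptual sketch only: the function name is hypothetical, and the model simply encodes the point made above that only the "syslog" destination leaves the switch and reaches the configured server.

```python
from typing import List, Tuple


def route_debug_message(message: str, destination: str,
                        syslog_servers: List[str]) -> List[Tuple[str, str]]:
    """Return (target, message) pairs describing where a debug message goes."""
    if destination == "syslog":
        # Forwarded to every Syslog server already defined with "logging".
        return [(server, message) for server in syslog_servers]
    if destination in ("console", "file", "buffer"):
        # These destinations stay local to the switch.
        return [(destination, message)]
    raise ValueError("unknown debug destination: " + destination)


if __name__ == "__main__":
    servers = ["10.5.15.6"]  # the SIEM server from the question
    print(route_debug_message("radius debug event", "syslog", servers))
    print(route_debug_message("radius debug event", "buffer", servers))
```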

The HPE Aruba Networking AOS-CX 10.12 System Management Guide states:

"To send debug messages, such as RADIUS debug messages, to a central SIEM server, first configure the Syslog server with the logging

Additionally, the HPE Aruba Networking AOS-CX 10.12 Security Guide notes:

"RADIUS debug messages can be sent to a Syslog server for centralized monitoring. After enabling RADIUS debugging with debug radius all, use debug destination syslog to send the messages to the Syslog server configured with the logging command, such as a SIEM server at 10.5.15.6." (Page 152, RADIUS Debugging Section)

What is a guideline for managing local certificates on an ArubaOS-Switch?

Options:

Before installing the local certificate, create a trust anchor (TA) profile with the root CA certificate for the certificate that you will install

Install an Online Certificate Status Protocol (OCSP) certificate to simplify the process of enrolling and re-enrolling for certificates

Generate the certificate signing request (CSR) with a program offline, then, install both the certificate and the private key on the switch in a single file.

Create a self-signed certificate online on the switch because ArubaOS-Switches do not support CA-signed certificates.

Answer:

A

Explanation:

When managing local certificates on an ArubaOS-Switch, a recommended guideline is to create a trust anchor (TA) profile with the root CA certificate before installing the local certificate. This step ensures that the switch can verify the authenticity of the certificate chain during SSL/TLS communications. The trust anchor profile establishes a basis of trust by containing the root CA certificate, which helps validate the authenticity of any subordinate certificates, including the local certificate installed on the switch. This process is essential for enhancing security on the network, as it ensures that encrypted communications involving the switch are based on a verified certificate hierarchy.

An AOS-CX switch currently has no device fingerprinting settings configured on it. You want the switch to start collecting DHCP and LLDP information. You enter these commands:

Switch(config)# client device-fingerprint profile myprofile

Switch(myprofile)# dhcp

Switch(myprofile)# lldp

What else must you do to allow the switch to collect information from clients?

Options:

Configure the switch as a DHCP relay

Add at least one LLDP option to the policy

Apply the policy to edge ports

Add at least one DHCP option to the policy

Answer:

C

Explanation:

Device fingerprinting on an AOS-CX switch allows the switch to collect information about connected clients to aid in profiling and policy enforcement, often in conjunction with a solution like ClearPass Policy Manager (CPPM). The commands provided create a device fingerprinting profile named "myprofile" and enable the collection of DHCP and LLDP information:

client device-fingerprint profile myprofile: Creates a fingerprinting profile.

dhcp: Enables the collection of DHCP information (e.g., DHCP options like Option 55 for fingerprinting).

lldp: Enables the collection of LLDP (Link Layer Discovery Protocol) information (e.g., system name, description).

However, creating the profile and enabling DHCP and LLDP collection is not enough for the switch to start collecting this information from clients. The profile must be applied to the interfaces (ports) where clients are connected.

Option C, "Apply the policy to edge ports," is correct. In AOS-CX, the device fingerprinting profile must be applied to the edge ports (ports where clients connect) to enable the switch to collect DHCP and LLDP information from those clients. This is done using the command client device-fingerprint profile

Switch(config)# interface 1/1/1

Switch(config-if)# client device-fingerprint profile myprofile

This ensures that the switch collects DHCP and LLDP data from clients connected to the specified ports.
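When many edge ports need the profile, the per-port lines can be generated rather than typed. The Python sketch below is a hypothetical helper that only builds text; the interface-level command is copied from the example above and has not been checked against a specific AOS-CX release, and the port names are placeholders.

```python
from typing import List


def fingerprint_port_config(profile: str, ports: List[str]) -> List[str]:
    """Build the CLI lines that apply a fingerprinting profile to each port."""
    lines = []
    for port in ports:
        lines.append("interface " + port)
        # Command text taken from the example above (unverified assumption).
        lines.append("    client device-fingerprint profile " + profile)
    return lines


if __name__ == "__main__":
    edge_ports = ["1/1/1", "1/1/2", "1/1/3"]  # placeholder edge ports
    print("\n".join(fingerprint_port_config("myprofile", edge_ports)))
```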

Option A, "Configure the switch as a DHCP relay," is incorrect. While a DHCP relay (using the ip helper-address command) is needed if the DHCP server is on a different subnet, it is not a requirement for the switch to collect DHCP information for fingerprinting. The switch can snoop DHCP traffic on the local VLAN without being a relay, as long as the profile is applied to the ports.

Option B, "Add at least one LLDP option to the policy," is incorrect. The lldp command in the fingerprinting profile already enables the collection of LLDP information. There is no need to specify individual LLDP options (e.g., system name, description) in the profile; the switch collects all available LLDP data by default.

Option D, "Add at least one DHCP option to the policy," is incorrect. The dhcp command in the fingerprinting profile already enables the collection of DHCP information, including options like Option 55 (Parameter Request List), which is commonly used for fingerprinting. There is no need to specify individual DHCP options in the profile.

The HPE Aruba Networking AOS-CX 10.12 Security Guide states:

"To enable device fingerprinting on an AOS-CX switch, create a device fingerprinting profile using the client device-fingerprint profile

Additionally, the HPE Aruba Networking AOS-CX 10.12 System Management Guide notes:

"The device fingerprinting profile must be applied to the ports where clients are connected to collect DHCP and LLDP information. The dhcp and lldp commands in the profile enable the collection of all relevant data for those protocols, such as DHCP Option 55 for fingerprinting, without requiring additional options to be specified." (Page 95, Device Fingerprinting Setup Section)

What is social engineering?

Options:

Hackers use Artificial Intelligence (AI) to mimic a user’s online behavior so they can infiltrate a network and launch an attack.

Hackers use employees to circumvent network security and gather the information they need to launch an attack.

Hackers intercept traffic between two users, eavesdrop on their messages, and pretend to be one or both users.

Hackers spoof the source IP address in their communications so they appear to be a legitimate user.

Answer:

B

Explanation:

Social engineering in the context of network security refers to the techniques used by hackers to manipulate individuals into breaking normal security procedures and best practices to gain unauthorized access to systems, networks, or physical locations, or for financial gain. Hackers use various forms of deception to trick employees into handing over confidential or personal information that can be used for fraudulent purposes. This definition encompasses phishing attacks, pretexting, baiting, and other manipulative techniques designed to exploit human psychology. Unlike other hacking methods that rely on technical means, social engineering targets the human element of security. References to social engineering, its methods, and defense strategies are commonly found in security training manuals, cybersecurity awareness programs, and authoritative resources like those from the SANS Institute or cybersecurity agencies.

Which is a correct description of a stage in the Lockheed Martin kill chain?

Options:

In the weaponization stage, which occurs after malware has been delivered to a system, the malware executes its function.

In the exploitation and installation phases, malware creates a backdoor into the infected system for the hacker.

In the reconnaissance stage, the hacker assesses the impact of the attack and how much information was exfiltrated.

In the delivery stage, malware collects valuable data and delivers or exfiltrates it to the hacker.

Answer:

B

Explanation:

The Lockheed Martin Cyber Kill Chain is a framework that outlines the stages of a cyber attack, from initial reconnaissance to achieving the attacker’s objective. It is often referenced in HPE Aruba Networking security documentation to help organizations understand and mitigate threats. The stages are: Reconnaissance, Weaponization, Delivery, Exploitation, Installation, Command and Control (C2), and Actions on Objectives.

Option A, "In the weaponization stage, which occurs after malware has been delivered to a system, the malware executes its function," is incorrect. The weaponization stage occurs before delivery, not after. In this stage, the attacker creates a deliverable payload (e.g., combining malware with an exploit). The execution of the malware happens in the exploitation stage, not weaponization.

Option B, "In the exploitation and installation phases, malware creates a backdoor into the infected system for the hacker," is correct. The exploitation phase involves the attacker exploiting a vulnerability (e.g., a software flaw) to execute the malware on the target system. The installation phase follows, where the malware installs itself to establish persistence, often by creating a backdoor (e.g., a remote access tool) to allow the hacker to maintain access to the system. These two phases are often linked in the kill chain as the malware gains a foothold and ensures continued access.

Option C, "In the reconnaissance stage, the hacker assesses the impact of the attack and how much information was exfiltrated," is incorrect. The reconnaissance stage occurs at the beginning of the kill chain, where the attacker gathers information about the target (e.g., network topology, vulnerabilities). Assessing the impact and exfiltration occurs in the Actions on Objectives stage, the final stage of the kill chain.

Option D, "In the delivery stage, malware collects valuable data and delivers or exfiltrates it to the hacker," is incorrect. The delivery stage involves the attacker transmitting the weaponized payload to the target (e.g., via a phishing email). Data collection and exfiltration occur later, in the Actions on Objectives stage, not during delivery.
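The ordering arguments used to rule out options A, C, and D can be checked against a simple ordered model of the seven stages listed above. This Python sketch is only a memory aid; the enum and helper names are hypothetical.

```python
from enum import IntEnum


class KillChain(IntEnum):
    """Stages of the Lockheed Martin Cyber Kill Chain, in order."""
    RECONNAISSANCE = 1
    WEAPONIZATION = 2
    DELIVERY = 3
    EXPLOITATION = 4
    INSTALLATION = 5
    COMMAND_AND_CONTROL = 6
    ACTIONS_ON_OBJECTIVES = 7


def occurs_before(first: KillChain, second: KillChain) -> bool:
    """Return True if stage 'first' comes earlier in the chain than 'second'."""
    return first < second


if __name__ == "__main__":
    # Option A claims weaponization happens after delivery; the order says otherwise.
    print(occurs_before(KillChain.WEAPONIZATION, KillChain.DELIVERY))
    # Exfiltration belongs to the last stage, well after delivery (option D).
    print(occurs_before(KillChain.DELIVERY, KillChain.ACTIONS_ON_OBJECTIVES))
```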

The HPE Aruba Networking Security Guide states:

"The Lockheed Martin Cyber Kill Chain outlines the stages of a cyber attack. In the exploitation phase, the attacker exploits a vulnerability to execute the malware on the target system. In the installation phase, the malware creates a backdoor or other persistence mechanism, such as a remote access tool, to allow the hacker to maintain access to the infected system for future actions." (Page 18, Cyber Kill Chain Overview Section)

Additionally, the HPE Aruba Networking AOS-8 8.11 User Guide notes:

"The exploitation and installation phases of the Lockheed Martin kill chain involve the malware gaining a foothold on the target system. During exploitation, the malware is executed by exploiting a vulnerability, and during installation, it creates a backdoor to ensure persistent access for the hacker, enabling further stages like command and control." (Page 420, Threat Mitigation Section)

Your Aruba Mobility Master-based solution has detected a suspected rogue AP. Among other information, the ArubaOS Detected Radios page lists this information for the AP:

SSID = PublicWiFi

BSSID = a8:bd:27:12:34:56

Match method = Plus one

Match type = Eth-Wired-Mac-Table

The security team asks you to explain why this AP is classified as a rogue. What should you explain?

Options:

The AP has a BSSID that is close to your authorized APs' BSSIDs. This indicates that the AP might be spoofing the corporate SSID and attempting to lure clients to it, making the AP a suspected rogue.

The AP is probably connected to your LAN because it has a BSSID that is close to a MAC address that has been detected in your LAN. Because it does not belong to the company, it is a suspected rogue.

The AP has been detected using multiple MAC addresses. This indicates that the AP is spoofing its MAC address, which qualifies it as a suspected rogue.

The AP is an AP that belongs to your solution. However, the ArubaOS has detected that it is behaving suspiciously. It might have been compromised, so it is classified as a suspected rogue.

Answer:

B

Explanation:

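The 'Eth-Wired-Mac-Table' match indicates that the AP was correlated with an entry in the Ethernet (wired) MAC address table of the network infrastructure. The 'Plus one' match method indicates that the AP's BSSID is not identical to that wired MAC address but is offset from it by one. Because an AP's radio BSSID is typically close to its Ethernet MAC address, this suggests that the AP is physically connected to the LAN. Because the AP does not belong to the company's authorized APs, it is classified as a suspected rogue.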
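The idea behind a "Plus one" match can be sketched in Python: compare the BSSID with MAC addresses seen on the wired side and report whether it is identical or off by one. This is an illustrative model, not ArubaOS code. The wired MAC value is made up, and the direction of the offset (BSSID equals wired MAC plus one) is an assumption for this sketch.

```python
from typing import List, Optional


def mac_to_int(mac: str) -> int:
    """Convert a colon-separated MAC address to an integer."""
    return int(mac.replace(":", ""), 16)


def match_method(bssid: str, wired_macs: List[str]) -> Optional[str]:
    """Report how a BSSID relates to any MAC learned on the wired network."""
    b = mac_to_int(bssid)
    for mac in wired_macs:
        w = mac_to_int(mac)
        if b == w:
            return "Exact match"
        if b == w + 1:
            return "Plus one"
        if b == w - 1:
            return "Minus one"
    return None  # no correlation with the wired side


if __name__ == "__main__":
    wired = ["a8:bd:27:12:34:55"]  # hypothetical entry in a wired MAC table
    print(match_method("a8:bd:27:12:34:56", wired))
```

A result of None in this model corresponds to an AP that is heard over the air but shows no evidence of being connected to the LAN.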

Which is a correct description of a Public Key Infrastructure (PKI)?

Options:

A device uses Intermediate Certification Authorities (CAs) to enable it to trust root CAs that are different from the root CA that signed its own certificate.

A user must manually choose to trust intermediate and end-entity certificates, or those certificates must be installed on the device as trusted in advance.

Root Certification Authorities (CAs) primarily sign certificates, and Intermediate Certification Authorities (CAs) primarily validate signatures.

A user must manually choose to trust a root Certification Authority (CA) certificate, or the root CA certificate must be installed on the device as trusted.

Answer:

D

Explanation:

Public Key Infrastructure (PKI) relies on a trusted root Certification Authority (CA) to issue certificates. Devices and users must trust the root CA for the PKI to be effective. If a root CA certificate is not pre-installed or manually chosen to be trusted on a device, any certificates issued by that CA will not be inherently trusted by the device.
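The role of the trusted root can be shown with a toy chain walk in Python. This sketch performs no cryptography and checks no signatures or validity dates; the certificate names and the issuer map are hypothetical. It only models the rule that a chain is trusted when it ends at a root that is already in the device's trust store.

```python
from typing import Dict, Set


def is_trusted(cert_name: str, issued_by: Dict[str, str],
               trusted_roots: Set[str]) -> bool:
    """Walk issuer links upward; trust only chains that end at a trusted root."""
    seen = set()
    current = cert_name
    while current not in seen:
        seen.add(current)
        issuer = issued_by.get(current)
        if issuer is None:
            return False  # chain is incomplete
        if issuer == current:
            # Self-signed certificate, i.e. a root: trust depends on the store.
            return current in trusted_roots
        current = issuer
    return False  # issuer loop that never reaches a root


if __name__ == "__main__":
    chain = {
        "Root CA": "Root CA",                  # self-signed root
        "Intermediate CA": "Root CA",
        "server.example.com": "Intermediate CA",
    }
    print(is_trusted("server.example.com", chain, {"Root CA"}))  # root installed
    print(is_trusted("server.example.com", chain, set()))        # root missing
```

Note that the intermediate and end-entity certificates never appear in the trust store in this model; they are trusted only because the walk reaches a root that was installed or accepted in advance.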

You are troubleshooting an authentication issue for HPE Aruba Networking switches that enforce 802.1X to a cluster of HPE Aruba Networking ClearPass Policy Manager (CPPMs). You know that CPPM is receiving and processing the authentication requests because the Aruba switches are showing Access-Rejects in their statistics. However, you cannot find the record for the Access-Rejects in CPPM Access Tracker.

What is something you can do to look for the records?

Options:

Go to the CPPM Event Viewer, because this is where RADIUS Access Rejects are stored.

Verify that you are logged in to the CPPM UI with read-write, not read-only, access.

Make sure that CPPM cluster settings are configured to show Access-Rejects.

Click Edit in Access Viewer and make sure that the correct servers are selected.

Answer:

A

Explanation:

The scenario involves troubleshooting an 802.1X authentication issue on HPE Aruba Networking switches (likely AOS-CX switches) that use a cluster of HPE Aruba Networking ClearPass Policy Manager (CPPM) servers as the RADIUS server. The switches show Access-Rejects in their statistics, indicating that CPPM is receiving and processing the authentication requests but rejecting them. However, the records for these Access-Rejects are not visible in CPPM Access Tracker.

Access Tracker: Access Tracker (Monitoring > Live Monitoring > Access Tracker) in CPPM logs all authentication attempts, including successful (Access-Accept) and failed (Access-Reject) requests. If an Access-Reject is not visible in Access Tracker, it suggests that the request was processed at a lower level and not logged in Access Tracker, or there is a visibility issue (e.g., filtering, clustering).

Option A, "Go to the CPPM Event Viewer, because this is where RADIUS Access Rejects are stored," is correct. The Event Viewer (Monitoring > Event Viewer) in CPPM logs system-level events, including RADIUS-related events that might not appear in Access Tracker. For example, if the Access-Reject is due to a configuration issue (e.g., the switch’s IP address is not recognized as a Network Access Device, NAD, or the shared secret is incorrect), the request may be rejected before it is logged in Access Tracker, and the Event Viewer will capture this event (e.g., "RADIUS authentication attempt from unknown NAD"). Since the switches confirm that CPPM is sending Access-Rejects, the Event Viewer is a good place to look for more details.

Option B, "Verify that you are logged in to the CPPM UI with read-write, not read-only, access," is incorrect. Access Tracker visibility is not dependent on read-write vs. read-only access. Both types of accounts can view Access Tracker records, though read-only accounts cannot modify configurations. The issue is that the records are not appearing, not that the user lacks permission to see them.

Option C, "Make sure that CPPM cluster settings are configured to show Access-Rejects," is incorrect. In a CPPM cluster, Access Tracker records are synchronized across nodes, and there is no specific cluster setting to "show Access-Rejects." Access Tracker logs all authentication attempts by default, unless filtered out (e.g., by time range or search criteria), but the issue here is that the records are not appearing at all.

Option D, "Click Edit in Access Viewer and make sure that the correct servers are selected," is incorrect. Access Tracker (not "Access Viewer") does not have an "Edit" option to select servers. In a CPPM cluster, Access Tracker shows records from all nodes by default, and the user can filter by time, NAD, or other criteria, but the absence of records suggests a deeper issue (e.g., the request was rejected before logging in Access Tracker).

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"If an Access-Reject is not visible in Access Tracker, it may indicate that the RADIUS request was rejected at a low level before being logged. The Event Viewer (Monitoring > Event Viewer) logs system-level events, including RADIUS Access-Rejects that do not appear in Access Tracker. For example, if the request is rejected due to an unknown NAD or shared secret mismatch, the Event Viewer will log an event like ‘RADIUS authentication attempt from unknown NAD,’ providing insight into the rejection." (Page 301, Troubleshooting RADIUS Issues Section)

Additionally, the HPE Aruba Networking AOS-CX 10.12 Security Guide notes:

"When troubleshooting 802.1X authentication issues, if the switch logs show Access-Rejects from the RADIUS server (e.g., ClearPass) but the records are not visible in Access Tracker, check the RADIUS server’s system logs. In ClearPass, the Event Viewer logs RADIUS Access-Rejects that may not appear in Access Tracker, such as those caused by NAD configuration issues." (Page 150, Troubleshooting 802.1X Authentication Section)

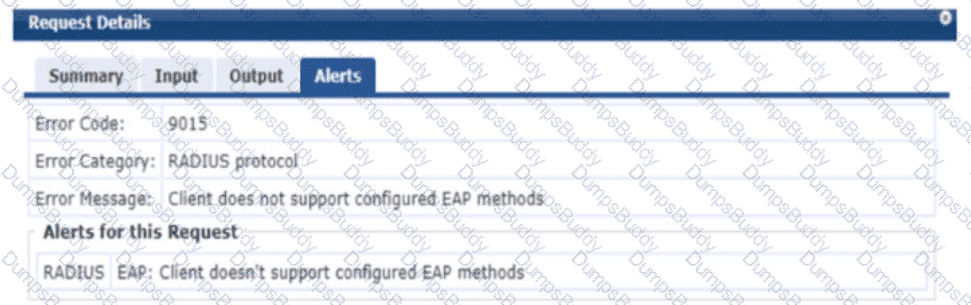

Refer to the exhibit.

A company has an HPE Aruba Networking Instant AP cluster. A Windows 10 client is attempting to connect to a WLAN that enforces WPA3-Enterprise with authentication to HPE Aruba Networking ClearPass Policy Manager (CPPM). CPPM is configured to require EAP-TLS. The client authentication fails. In the record for this client's authentication attempt on CPPM, you see this alert.

What is one thing that you check to resolve this issue?

Options:

Whether EAP-TLS is enabled in the AAA Profile settings for the WLAN on the IAP cluster

Whether the client has a valid certificate installed on it to let it support EAP-TLS

Whether EAP-TLS is enabled in the SSID Profile settings for the WLAN on the IAP cluster

Whether the client has a third-party 802.1X supplicant, as Windows 10 does not support EAP-TLS

Answer:

B

Explanation:

The scenario involves an HPE Aruba Networking Instant AP (IAP) cluster with a WLAN configured for WPA3-Enterprise security, using HPE Aruba Networking ClearPass Policy Manager (CPPM) as the authentication server. CPPM is set to require EAP-TLS for authentication. A Windows 10 client attempts to connect but fails, and the CPPM Access Tracker shows an error: "Client does not support configured EAP methods," with the error code 9015 under the RADIUS protocol category.

EAP-TLS (Extensible Authentication Protocol - Transport Layer Security) is a certificate-based authentication method that requires both the client (supplicant) and the server (CPPM) to present valid certificates during the authentication process. The error message indicates that the client does not support the EAP method configured on CPPM (EAP-TLS), meaning the client is either not configured to use EAP-TLS or lacks the necessary components to perform EAP-TLS authentication.
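The method mismatch behind this alert can be sketched in a few lines of Python. This is a simplified model, not ClearPass or Windows code: the function `negotiate` and the method lists are hypothetical, and real EAP negotiation uses Legacy Nak responses rather than a direct list comparison. The type numbers 13 (EAP-TLS) and 25 (PEAP) are the standard EAP method types.

```python
# Standard EAP method type numbers (IANA EAP registry).
EAP_TLS = 13
EAP_PEAP = 25


def negotiate(server_methods, client_methods):
    """Return the first server-configured method the client also offers.

    Hypothetical helper: returns None when there is no common method,
    which corresponds to the "client does not support configured EAP
    methods" outcome described above.
    """
    for method in server_methods:
        if method in client_methods:
            return method
    return None


# Assumption: the service on the server allows only EAP-TLS.
server_methods = [EAP_TLS]

# Assumption: a client with no usable certificate offers only PEAP.
client_without_cert = [EAP_PEAP]
# Assumption: a client with a valid certificate can also offer EAP-TLS.
client_with_cert = [EAP_PEAP, EAP_TLS]

print(negotiate(server_methods, client_without_cert))
print(negotiate(server_methods, client_with_cert))
```

In this sketch the access point never appears: the authenticator only relays EAP messages, so the outcome depends on what the client and the server can each offer.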

Option B, "Whether the client has a valid certificate installed on it to let it support EAP-TLS," is correct. EAP-TLS requires the client to have a valid client certificate issued by a trusted Certificate Authority (CA) that CPPM trusts. If the Windows 10 client does not have a client certificate installed, or if the certificate is invalid (e.g., expired, not trusted by CPPM, or missing), the client cannot negotiate EAP-TLS, resulting in the error seen in CPPM. This is a common issue in EAP-TLS deployments, and checking the client’s certificate is a critical troubleshooting step.

Option A, "Whether EAP-TLS is enabled in the AAA Profile settings for the WLAN on the IAP cluster," is incorrect. In 802.1X, the IAP acts as a pass-through authenticator: it relays EAP messages between the client and CPPM and does not choose the EAP method, which is negotiated between the supplicant and the authentication server. Because the alert appears in the CPPM record, the request reached CPPM, so the IAP cluster is relaying authentication correctly. A problem on the IAP side would typically produce a different symptom (e.g., a connectivity or configuration mismatch issue).

Option C, "Whether EAP-TLS is enabled in the SSID Profile settings for the WLAN on the IAP cluster," is incorrect for a similar reason. The SSID profile on the IAP cluster defines the security settings (e.g., WPA3-Enterprise); it does not select the EAP method. Since the authentication request reached CPPM, the WLAN's 802.1X settings are working, and the method mismatch lies between the client and CPPM.

Option D, "Whether the client has a third-party 802.1X supplicant, as Windows 10 does not support EAP-TLS," is incorrect because Windows 10 natively supports EAP-TLS. The built-in Windows 10 802.1X supplicant (Windows WLAN AutoConfig service) supports EAP-TLS, provided a valid client certificate is installed. A third-party supplicant is not required.

The HPE Aruba Networking ClearPass Policy Manager 6.11 User Guide states:

"EAP-TLS requires both the client and the server to present a valid certificate during the authentication process. If the client does not have a valid certificate installed, or if the certificate is not trusted by ClearPass (e.g., the issuing CA is not in the ClearPass trust list), the authentication will fail with an error such as ‘Client does not support configured EAP methods’ (Error Code 9015). To resolve this, ensure that the client has a valid certificate installed and that the certificate’s issuing CA is trusted by ClearPass." (Page 295, EAP-TLS Troubleshooting Section)

Additionally, the HPE Aruba Networking Instant 8.11 User Guide notes:

"For WPA3-Enterprise with EAP-TLS, the client must have a valid client certificate installed to authenticate successfully. If the client lacks a certificate or the certificate is invalid, the authentication will fail, and ClearPass will log an error indicating that the client does not support the configured EAP method." (Page 189, WPA3-Enterprise Configuration Section)

You are deploying a new wireless solution with an HPE Aruba Networking Mobility Master (MM), Mobility Controllers (MCs), and campus APs (CAPs). The solution will include a WLAN that uses Tunnel for the forwarding mode and WPA3-Enterprise for the security option.

You have decided to assign the WLAN to VLAN 301, a new VLAN. A pair of core routing switches will act as the default router for wireless user traffic.

Which links need to carry VLAN 301?

Options:

Only links on the path between APs and the core routing switches

Only links on the path between APs and the MC

All links in the campus LAN to ensure seamless roaming

Only links between MC ports and the core routing switches

Answer:

D

Explanation:

In an HPE Aruba Networking AOS-8 architecture with a Mobility Master (MM), Mobility Controllers (MCs), and campus APs (CAPs), the WLAN is configured to use Tunnel forwarding mode and WPA3-Enterprise security. In Tunnel mode, all user traffic from the APs is encapsulated in a GRE tunnel and sent to the MC, which then forwards the traffic to the appropriate VLAN. The WLAN is assigned to VLAN 301, and the core routing switches act as the default router for wireless user traffic.

Tunnel Forwarding Mode: In this mode, the AP does not directly place user traffic onto the wired network. Instead, the AP tunnels all user traffic to the MC over a GRE tunnel. The MC then decapsulates the traffic and places it onto the wired network in the specified VLAN (VLAN 301 in this case). This means the VLAN tagging for user traffic occurs at the MC, not at the AP.

VLAN 301 Assignment: Since the WLAN is assigned to VLAN 301, the MC will tag user traffic with VLAN 301 when forwarding it to the wired network. The core routing switches, acting as the default router, need to receive this traffic on VLAN 301 to route it appropriately.

Therefore, VLAN 301 needs to be carried on the links between the MC ports and the core routing switches, as this is where the MC forwards the user traffic after decapsulating it from the GRE tunnel.
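The reasoning above can be expressed as a small Python sketch. The topology, device names, and the helper functions `hops` and `tunnel_mode_vlan_links` are assumptions made for illustration; they are not taken from the exam scenario or from any Aruba tool.

```python
# Hypothetical topology: device names are illustrative only.
# Path the GRE tunnel takes from an AP to the MC (carried in the AP's own VLAN).
AP_TO_MC_PATH = ["ap", "access-switch", "core", "mc"]
# Path from the MC to the default router for the user VLAN.
MC_TO_ROUTER_PATH = ["mc", "core"]

USER_VLAN = 301


def hops(path):
    """Turn an ordered device path into a list of (device, device) links."""
    return list(zip(path, path[1:]))


def tunnel_mode_vlan_links(mc_to_router_path):
    """Links that must carry the user VLAN in Tunnel mode.

    User frames are tagged only after the MC decapsulates them, so the
    VLAN is needed on every hop from the MC to the default router and
    nowhere else.
    """
    return hops(mc_to_router_path)


print(f"VLAN {USER_VLAN} required on:", tunnel_mode_vlan_links(MC_TO_ROUTER_PATH))
print("GRE-only links:", hops(AP_TO_MC_PATH))
```

The AP-to-MC links still appear in the second list, but they carry user traffic only inside the GRE tunnel, so they need the AP's VLAN rather than VLAN 301.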

Option A, "Only links on the path between APs and the core routing switches," is incorrect because, in Tunnel mode, the APs do not directly forward user traffic to the wired network. The traffic is tunneled to the MC, so the links between the APs and the core switches do not need to carry VLAN 301 for user traffic (though they may carry other VLANs for AP management).

Option B, "Only links on the path between APs and the MC," is incorrect for the same reason. The GRE tunnel between the AP and MC carries encapsulated user traffic, and VLAN 301 tagging occurs at the MC, not on the AP-to-MC link.

Option C, "All links in the campus LAN to ensure seamless roaming," is incorrect because VLAN 301 only needs to be present where the MC forwards user traffic to the wired network (i.e., between the MC and the core switches). Extending VLAN 301 to all links is unnecessary and could introduce security or scalability issues.

Option D, "Only links between MC ports and the core routing switches," is correct because the MC places user traffic onto VLAN 301 and forwards it to the core switches, which act as the default router.

The HPE Aruba Networking AOS-8 8.11 User Guide states:

"In Tunnel forwarding mode, the AP encapsulates all user traffic in a GRE tunnel and sends it to the Mobility Controller (MC). The MC decapsulates the traffic and forwards it to the wired network on the VLAN assigned to the WLAN. For example, if the WLAN is assigned to VLAN 301, the MC tags the user traffic with VLAN 301 and sends it out of its wired interface to the upstream switch. Therefore, the VLAN must be configured on the links between the MC and the upstream switch or router that acts as the default gateway for the VLAN." (Page 275, Tunnel Forwarding Mode Section)

Additionally, the HPE Aruba Networking Wireless LAN Design Guide notes:

"When using Tunnel mode, the VLAN assigned to the WLAN must be carried on the wired links between the Mobility Controller and the default router for the VLAN. The links between the APs and the MC do not need to carry the user VLAN, as all traffic is tunneled to the MC, which handles VLAN tagging." (Page 52, VLAN Configuration Section)

Why might devices use a Diffie-Hellman exchange?

Options:

to agree on a shared secret in a secure manner over an insecure network

to obtain a digital certificate signed by a trusted Certification Authority

to prove knowledge of a passphrase without transmitting the passphrase

to signal that they want to use asymmetric encryption for future communications

Answer:

A

Explanation:

Devices use a Diffie-Hellman exchange to agree on a shared secret in a secure manner over an insecure network. Each party sends a public value derived from its own private value, and both sides then compute the same secret without that secret ever crossing the channel. The shared secret is typically used to derive keys for a symmetric cipher that encrypts subsequent communications. The exchange is valuable because it requires no prior shared secret, which is why it underpins many secure communications protocols, including SSL/TLS and IPsec (IKE). The protocol is described in standard cryptography textbooks and in Internet Engineering Task Force (IETF) specifications.
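A minimal sketch of the exchange in Python may make the idea concrete. The parameters below are toy values chosen for readability (a 127-bit prime and a base of 3), and the function names are hypothetical; real deployments use standardized groups of 2048 bits or more, or elliptic-curve variants, and authenticate the exchange.

```python
import secrets

# Toy public parameters, agreed in the clear. Far too small for real use.
P = 2**127 - 1  # a known prime (Mersenne prime)
G = 3           # public base


def make_keypair():
    """Pick a random private value and derive the public value G^private mod P."""
    private = secrets.randbelow(P - 3) + 2  # value in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_secret(own_private, peer_public):
    """Combine our private value with the peer's public value."""
    return pow(peer_public, own_private, P)


# Each side generates a key pair and sends only the public half.
a_priv, a_pub = make_keypair()
b_priv, b_pub = make_keypair()

# Both sides arrive at the same secret without ever transmitting it.
a_secret = shared_secret(a_priv, b_pub)
b_secret = shared_secret(b_priv, a_pub)
print(a_secret == b_secret)
```

An eavesdropper sees only `P`, `G`, and the two public values; recovering the secret from those requires solving the discrete logarithm problem, which is what makes the exchange safe over an insecure network when the parameters are large enough.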

What are the roles of 802.1X authenticators and authentication servers?

Options:

The authenticator stores the user account database, while the server stores access policies.

The authenticator supports only EAP, while the authentication server supports only RADIUS.

The authenticator is a RADIUS client and the authentication server is a RADIUS server.

The authenticator makes access decisions and the server communicates them to the supplicant.

Answer:

C

Explanation: