JN0-214 Cloud Associate (JNCIA-Cloud) Questions and Answers

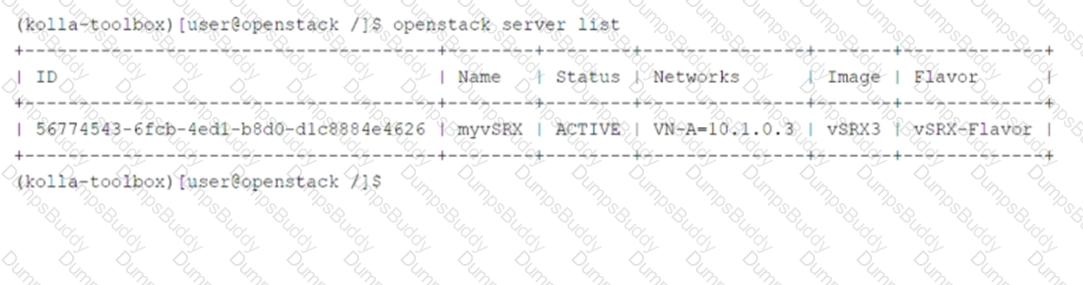

Click the Exhibit button.

Referring to the exhibit, which two statements are correct? (Choose two.)

Options:

A. The myvSRX instance is using a default image.

B. The myvSRX instance is a part of a default network.

C. The myvSRX instance is created using a custom flavor.

D. The myvSRX instance is currently running.

Answer:

C, D

Explanation:

The openstack server list command provides information about virtual machine (VM) instances in the OpenStack environment. Let’s analyze the exhibit and each statement:

Key Information from the Exhibit:

The output shows details about the myvSRX instance:

Status: ACTIVE (indicating the instance is running).

Networks: VN-A-10.1.0.3 (indicating the instance is part of a specific network).

Image: vSRX3 (indicating the instance was created using a custom image).

Flavor: vSRX-Flavor (indicating the instance was created using a custom flavor).

Option Analysis:

A. The myvSRX instance is using a default image.

Incorrect: The image name vSRX3 suggests that this is a custom image, not the default image provided by OpenStack.

B. The myvSRX instance is a part of a default network.

Incorrect: The network name VN-A-10.1.0.3 indicates that the instance is part of a specific network, not the default network.

C. The myvSRX instance is created using a custom flavor.

Correct: The flavor name vSRX-Flavor indicates that the instance was created using a custom flavor, which defines the CPU, RAM, and disk space properties.

D. The myvSRX instance is currently running.

Correct: The ACTIVE status confirms that the instance is currently running.

Why These Statements?

Custom Flavor: The vSRX-Flavor name clearly indicates that a custom flavor was used to define the instance's resource allocation.

Running Instance: The ACTIVE status confirms that the instance is operational and available for use.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack commands and outputs, including the openstack server list command. Recognizing how images, flavors, and statuses are represented is essential for managing VM instances effectively.

For example, Juniper Contrail integrates with OpenStack Nova to provide advanced networking features for VMs, ensuring seamless operation based on their configurations.
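The checks applied to the exhibit can be sketched in Python. This is only an illustration: the row is a hypothetical dictionary built from the field values quoted in the explanation, and the set of stock flavor names (m1.tiny through m1.xlarge) is an assumption about a typical OpenStack test installation, not something shown in the exhibit.

```python
# Hypothetical row, using the field values quoted in the explanation above.
server = {
    "Name": "myvSRX",
    "Status": "ACTIVE",
    "Networks": "VN-A-10.1.0.3",
    "Image": "vSRX3",
    "Flavor": "vSRX-Flavor",
}

# Assumption: flavor names commonly pre-created in OpenStack test installs.
STOCK_FLAVORS = {"m1.tiny", "m1.small", "m1.medium", "m1.large", "m1.xlarge"}


def is_running(row):
    """An instance whose status is ACTIVE is running."""
    return row["Status"] == "ACTIVE"


def uses_custom_flavor(row):
    """Treat any flavor outside the assumed stock set as custom."""
    return row["Flavor"] not in STOCK_FLAVORS


print("running:", is_running(server))
print("custom flavor:", uses_custom_flavor(server))
```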

You must provide tunneling in the overlay that supports multipath capabilities.

Which two protocols provide this function? (Choose two.)

Options:

A. MPLSoGRE

B. VXLAN

C. VPN

D. MPLSoUDP

Answer:

B, D

Explanation:

In cloud networking, overlay networks are used to create virtualized networks that abstract the underlying physical infrastructure. To support multipath capabilities, certain protocols provide efficient tunneling mechanisms. Let’s analyze each option:

A. MPLSoGRE

Incorrect: MPLS over GRE (MPLSoGRE) is a tunneling protocol that encapsulates MPLS packets within GRE tunnels. While it supports MPLS traffic, it does not inherently provide multipath capabilities: the GRE header has no port fields, so underlay routers typically see the same outer header for every flow between two tunnel endpoints and hash them onto one path.

B. VXLAN

Correct: VXLAN (Virtual Extensible LAN) is an overlay protocol that encapsulates Layer 2 Ethernet frames within UDP packets. It supports multipath capabilities by leveraging Equal-Cost Multi-Path (ECMP) routing in the underlay network, because the outer UDP source port varies per inner flow. VXLAN is widely used in cloud environments for extending Layer 2 networks across data centers.

C. VPN

Incorrect: Virtual Private Networks (VPNs) are used to securely connect remote networks or users over public networks. They do not inherently provide multipath capabilities or overlay tunneling for virtual networks.

D. MPLSoUDP

Correct: MPLS over UDP (MPLSoUDP) is a tunneling protocol that encapsulates MPLS packets within UDP packets. Like VXLAN, it supports multipath capabilities by utilizing ECMP in the underlay network. MPLSoUDP is often used in service provider environments for scalable and flexible network architectures.

Why These Protocols?

VXLAN: Provides Layer 2 extension and supports multipath forwarding, making it ideal for large-scale cloud deployments.

MPLSoUDP: Combines the benefits of MPLS with UDP encapsulation, enabling efficient multipath routing in overlay networks.

JNCIA Cloud References:

The JNCIA-Cloud certification covers overlay networking protocols like VXLAN and MPLSoUDP as part of its curriculum on cloud architectures. Understanding these protocols is essential for designing scalable and resilient virtual networks.

For example, Juniper Contrail uses VXLAN to extend virtual networks across distributed environments, ensuring seamless communication and high availability.
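To see why UDP-based encapsulation helps with multipath, here is a minimal Python sketch of underlay ECMP hashing. It is a simplification under stated assumptions: real routers use vendor-specific hash functions (SHA-256 stands in here), and the tunnel endpoint addresses and inner flows are hypothetical. The sketch only shows that a per-flow outer UDP source port gives the underlay something to hash on, while a GRE outer header looks identical for every flow between the same two endpoints.

```python
import hashlib


def ecmp_path(outer_fields, n_paths):
    """Pick an underlay path by hashing the outer header fields."""
    digest = hashlib.sha256(repr(outer_fields).encode()).digest()
    return int.from_bytes(digest[:4], "big") % n_paths


def udp_source_port(inner_flow):
    """Derive the outer UDP source port from a hash of the inner flow."""
    digest = hashlib.sha256(repr(inner_flow).encode()).digest()
    return 49152 + int.from_bytes(digest[:2], "big") % 16384


# Hypothetical tunnel endpoints and inner flows, for illustration only.
SRC_VTEP, DST_VTEP = "192.0.2.1", "192.0.2.2"
inner_flows = [("10.1.0.3", "10.1.0.%d" % i, 6, 40000 + i, 443) for i in range(50)]

# UDP-based encapsulation: the outer source port varies per inner flow.
# 17 is the IP protocol number for UDP; 4789 is the VXLAN destination port.
udp_paths = {
    ecmp_path((SRC_VTEP, DST_VTEP, 17, udp_source_port(flow), 4789), 4)
    for flow in inner_flows
}

# GRE encapsulation (IP protocol 47): no port fields, so every flow between
# the two endpoints presents the same outer tuple to the underlay.
gre_paths = {ecmp_path((SRC_VTEP, DST_VTEP, 47), 4) for _ in inner_flows}

print("paths used with UDP encapsulation:", len(udp_paths))
print("paths used with GRE encapsulation:", len(gre_paths))
```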

You want to limit the memory, CPU, and network utilization of a set of processes running on a Linux host.

Which Linux feature would you configure in this scenario?

Options:

A. virtual routing and forwarding instances

B. network namespaces

C. control groups

D. slicing

Answer:

C

Explanation:

Linux provides several features to manage system resources and isolate processes. Let’s analyze each option:

A. virtual routing and forwarding instances

Incorrect: Virtual Routing and Forwarding (VRF) is a networking feature used to create multiple routing tables on a single router or host. It is unrelated to limiting memory, CPU, or network utilization for processes.

B. network namespaces

Incorrect: Network namespaces are used to isolate network resources (e.g., interfaces, routing tables) for processes. While they can help with network isolation, they do not directly limit memory or CPU usage.

C. control groups

Correct: Control Groups (cgroups) are a Linux kernel feature that allows you to limit, account for, and isolate the resource usage (CPU, memory, disk I/O, network) of a set of processes. cgroups are commonly used in containerization technologies like Docker and Kubernetes to enforce resource limits.

D. slicing

Incorrect: "Slicing" is not a recognized Linux feature for resource management. This term may refer to dividing resources in other contexts but is not relevant here.

Why Control Groups?

Resource Management: cgroups provide fine-grained control over memory, CPU, and network utilization, ensuring that processes do not exceed their allocated resources.

Containerization Foundation: cgroups are a core technology behind container runtimes like containerd and orchestration platforms like Kubernetes.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Linux features like cgroups as part of its containerization curriculum. Understanding cgroups is essential for managing resource allocation in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to manage containerized workloads, leveraging cgroups to enforce resource limits.
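As a rough illustration of how such limits are expressed, the Python sketch below builds the values for two cgroup v2 interface files, memory.max and cpu.max. It assumes a host using the cgroup v2 unified hierarchy; the function name and the chosen limits are hypothetical, the sketch only computes the values rather than writing them, and network limits are left out.

```python
def cgroup_v2_settings(memory_bytes, cpu_fraction, period_us=100_000):
    """Return {interface file: value} for a memory and a CPU limit.

    memory.max takes a byte count; cpu.max takes "<quota> <period>" in
    microseconds, so half a CPU with the default period is "50000 100000".
    """
    quota_us = int(cpu_fraction * period_us)
    return {
        "memory.max": str(memory_bytes),
        "cpu.max": f"{quota_us} {period_us}",
    }


# Hypothetical limits: 256 MiB of memory and half of one CPU.
settings = cgroup_v2_settings(memory_bytes=256 * 1024 * 1024, cpu_fraction=0.5)
for filename, value in settings.items():
    # On a real host these values would be written, as root, to files under
    # a group directory such as /sys/fs/cgroup/<group>/ (hypothetical group).
    print(filename, "=", value)
```

Any process whose PID is added to that group's cgroup.procs file would then be subject to both limits.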

What are the two characteristics of the Network Functions Virtualization (NFV) framework? (Choose two.)

Options:

A. It implements virtualized tunnel endpoints.

B. It decouples the network software from the hardware.

C. It implements virtualized network functions.

D. It decouples the network control plane from the forwarding plane.

Answer:

B, C

Explanation:

Network Functions Virtualization (NFV) is a framework designed to virtualize network services traditionally run on proprietary hardware. NFV aims to reduce costs, improve scalability, and increase flexibility by decoupling network functions from dedicated hardware appliances. Let’s analyze each statement:

A. It implements virtualized tunnel endpoints.

Incorrect: While NFV can support virtualized tunnel endpoints (e.g., VXLAN gateways), this is not a defining characteristic of the NFV framework. Tunneling protocols are typically associated with SDN or overlay networks rather than NFV itself.

B. It decouples the network software from the hardware.

Correct: One of the primary goals of NFV is to separate network functions (e.g., firewalls, load balancers, routers) from proprietary hardware. Instead, these functions are implemented as software running on standard servers or virtual machines.

C. It implements virtualized network functions.

Correct: NFV replaces traditional hardware-based network appliances with virtualized network functions (VNFs). Examples include virtual firewalls, virtual routers, and virtual load balancers. These VNFs run on commodity hardware and are managed through orchestration platforms.

D. It decouples the network control plane from the forwarding plane.

Incorrect: Decoupling the control plane from the forwarding plane is a characteristic of Software-Defined Networking (SDN), not NFV. While NFV and SDN are complementary technologies, they serve different purposes. NFV focuses on virtualizing network functions, while SDN focuses on programmable network control.

JNCIA Cloud References:

The JNCIA-Cloud certification covers NFV as part of its discussion on cloud architectures and virtualization. NFV is particularly relevant in modern cloud environments because it enables flexible and scalable deployment of network services without reliance on specialized hardware.

For example, Juniper Contrail integrates with NFV frameworks to deploy and manage VNFs, enabling service providers to deliver network services efficiently and cost-effectively.

Which OpenStack object enables multitenancy?

Options:

A. role

B. flavor

C. image

D. project

Answer:

D

Explanation:

Multitenancy is a key feature of OpenStack, enabling multiple users or organizations to share cloud resources while maintaining isolation. Let’s analyze each option:

A. role

Incorrect: A role defines permissions and access levels for users within a project. While roles are important for managing user privileges, they do not directly enable multitenancy.

B. flavor

Incorrect: A flavor specifies the compute, memory, and storage capacity of a VM instance. It is unrelated to enabling multitenancy.

C. image

Incorrect: An image is a template used to create VM instances. While images are essential for deploying VMs, they do not enable multitenancy.

D. project

Correct: A project (also known as a tenant) is the primary mechanism for enabling multitenancy in OpenStack. Each project represents an isolated environment where resources (e.g., VMs, networks, storage) are provisioned and managed independently.

Why Project?

Isolation: Projects ensure that resources allocated to one tenant are isolated from others, enabling secure and efficient resource sharing.

Resource Management: Each project has its own quotas, users, and resources, making it the foundation of multitenancy in OpenStack.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding OpenStack’s multitenancy model, including the role of projects. Recognizing how projects enable resource isolation is essential for managing shared cloud environments.

For example, Juniper Contrail integrates with OpenStack Keystone to enforce multitenancy and network segmentation for projects.

Which two tools are used to deploy a Kubernetes environment for testing and development purposes? (Choose two.)

Options:

A. OpenStack

B. kind

C. oc

D. minikube

Answer:

B, D

Explanation:

Kubernetes is a popular container orchestration platform used for deploying and managing containerized applications. Several tools are available for setting up Kubernetes environments for testing and development purposes. Let’s analyze each option:

A. OpenStack

Incorrect: OpenStack is an open-source cloud computing platform used for managing infrastructure resources (e.g., compute, storage, networking). It is not specifically designed for deploying Kubernetes environments.

B. kind

Correct: kind (Kubernetes IN Docker) is a tool for running local Kubernetes clusters using Docker containers as nodes. It is lightweight and ideal for testing and development purposes.

C. oc

Incorrect: oc is the command-line interface (CLI) for OpenShift, a Kubernetes-based container platform. While OpenShift can be used to deploy Kubernetes environments, oc itself is not a tool for setting up standalone Kubernetes clusters.

D. minikube

Correct: minikube is a tool for running a single-node Kubernetes cluster locally on your machine. It is widely used for testing and development due to its simplicity and ease of setup.

Why These Tools?

kind: Ideal for simulating multi-node Kubernetes clusters in a lightweight environment.

minikube: Perfect for beginners and developers who need a simple, single-node Kubernetes cluster for experimentation.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes as part of its container orchestration curriculum. Tools like kind and minikube are essential for learning and experimenting with Kubernetes in local environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features for containerized workloads. Proficiency with Kubernetes tools ensures effective operation and troubleshooting.

What are two Kubernetes worker node components? (Choose two.)

Options:

A. kube-apiserver

B. kubelet

C. kube-scheduler

D. kube-proxy

Answer:

B, D

Explanation:

Kubernetes worker nodes are responsible for running containerized applications and managing the workloads assigned to them. Each worker node contains several key components that enable it to function within a Kubernetes cluster. Let’s analyze each option:

A. kube-apiserver

Incorrect: The kube-apiserver is a control plane component, not a worker node component. It serves as the front-end for the Kubernetes API, handling communication between the control plane and worker nodes.

B. kubelet

Correct: The kubelet is a critical worker node component. It ensures that containers are running in the desired state by interacting with the container runtime (e.g., containerd). It communicates with the control plane to receive instructions and report the status of pods.

C. kube-scheduler

Incorrect: The kube-scheduler is a control plane component responsible for assigning pods to worker nodes based on resource availability and other constraints. It does not run on worker nodes.

D. kube-proxy

Correct: The kube-proxy is another essential worker node component. It manages network communication for services and pods by implementing load balancing and routing rules. It ensures that traffic is correctly forwarded to the appropriate pods.

Why These Components?

kubelet: Ensures that containers are running as expected and maintains the desired state of pods.

kube-proxy: Handles networking and enables communication between services and pods within the cluster.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes architecture, including the roles of worker node components. Understanding the functions of kubelet and kube-proxy is crucial for managing Kubernetes clusters and troubleshooting issues.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features. Proficiency with worker node components ensures efficient operation of containerized workloads.
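The split between control plane and worker node components can be written down as a small lookup, sketched here in Python. The two sets reflect the standard Kubernetes component list; the helper name is hypothetical, and the sketch ignores the fact that kubelet and kube-proxy also run on control plane nodes in many installations.

```python
# Components grouped by where Kubernetes documents them as running.
CONTROL_PLANE = {"kube-apiserver", "kube-scheduler", "kube-controller-manager", "etcd"}
NODE = {"kubelet", "kube-proxy"}


def where_it_runs(component):
    """Classify a component name (hypothetical helper for this sketch)."""
    if component in NODE:
        return "worker node"
    if component in CONTROL_PLANE:
        return "control plane"
    return "unknown"


options = ["kube-apiserver", "kubelet", "kube-scheduler", "kube-proxy"]
worker_components = [c for c in options if where_it_runs(c) == "worker node"]
print(worker_components)
```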

You must install a basic Kubernetes cluster.

Which tool would you use in this situation?

Options:

A. kubeadm

B. kubectl apply

C. kubectl create

D. dashboard

Answer:

A

Explanation:

To install a basic Kubernetes cluster, you need a tool that simplifies the process of bootstrapping and configuring the cluster. Let’s analyze each option:

A. kubeadm

Correct: kubeadm is a command-line tool specifically designed to bootstrap a Kubernetes cluster. It automates the process of setting up the control plane and worker nodes, making it the most suitable choice for installing a basic Kubernetes cluster.

B. kubectl apply

Incorrect: kubectl apply is used to deploy resources (e.g., pods, services) into an existing Kubernetes cluster by applying YAML or JSON manifests. It does not bootstrap or install a new cluster.

C. kubectl create

Incorrect: kubectl create is another Kubernetes CLI command used to create resources in an existing cluster. Like kubectl apply, it does not handle cluster installation.

D. dashboard

Incorrect: The Kubernetes dashboard is a web-based UI for managing and monitoring a Kubernetes cluster. It requires an already-installed cluster and cannot be used to install one.

Why kubeadm?

Cluster Bootstrapping: kubeadm provides a simple and standardized way to initialize a Kubernetes cluster, including setting up the control plane and joining worker nodes.

Flexibility: While it creates a basic cluster, it allows for customization and integration with additional tools like CNI plugins.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes installation methods, including kubeadm. Understanding how to use kubeadm is essential for deploying and managing Kubernetes clusters effectively.

For example, Juniper Contrail integrates with Kubernetes clusters created using kubeadm to provide advanced networking and security features.
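The two-step bootstrap (initialize the control plane, then join workers) can be sketched as the commands an automation script might assemble. This Python example only builds and prints the command lines; it does not run them. The pod CIDR, API server endpoint, token, and hash are hypothetical placeholders, and a real join command would use the values printed by kubeadm init.

```python
import shlex


def init_command(pod_cidr):
    """Command run on the first control plane node."""
    return ["kubeadm", "init", f"--pod-network-cidr={pod_cidr}"]


def join_command(endpoint, token, ca_cert_hash):
    """Command run on each worker node to join the cluster."""
    return [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]


# Placeholder values for illustration only.
print(shlex.join(init_command("10.244.0.0/16")))
print(shlex.join(join_command("192.0.2.10:6443", "<token>", "sha256:<hash>")))
```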

You are asked to deploy a cloud solution for a customer that requires strict control over their resources and data. The deployment must allow the customer to implement and manage precise security controls to protect their data.

Which cloud deployment model should be used in this situation?

Options:

A. private cloud

B. hybrid cloud

C. dynamic cloud

D. public cloud

Answer:

A

Explanation:

Cloud deployment models define how cloud resources are provisioned and managed. The options in this question compare as follows:

Public Cloud: Resources are shared among multiple organizations and managed by a third-party provider. Examples include AWS, Microsoft Azure, and Google Cloud Platform.

Private Cloud: Resources are dedicated to a single organization and can be hosted on-premises or by a third-party provider. Private clouds offer greater control over security, compliance, and resource allocation.

Hybrid Cloud: Combines public and private clouds, allowing data and applications to move between them. This model provides flexibility and optimization of resources.

Dynamic Cloud: Not a standard cloud deployment model. It may refer to the dynamic scaling capabilities of cloud environments but is not a recognized category.

In this scenario, the customer requires strict control over their resources and data, as well as the ability to implement and manage precise security controls. A private cloud is the most suitable deployment model because:

Dedicated Resources: The infrastructure is exclusively used by the organization, ensuring isolation and control.

Customizable Security: The organization can implement its own security policies, encryption mechanisms, and compliance standards.

On-Premises Option: If hosted internally, the organization retains full physical control over the data center and hardware.

Why Not Other Options?

Public Cloud: Shared infrastructure means less control over security and compliance. While public clouds offer robust security features, they may not meet the strict requirements of the customer.

Hybrid Cloud: While hybrid clouds combine the benefits of public and private clouds, they introduce complexity and may not provide the level of control the customer desires.

Dynamic Cloud: Not a valid deployment model.

JNCIA Cloud References:

The JNCIA-Cloud certification covers cloud deployment models and their use cases. Private clouds are highlighted as ideal for organizations with stringent security and compliance requirements, such as financial institutions, healthcare providers, and government agencies.

For example, Juniper Contrail supports private cloud deployments by providing advanced networking and security features, enabling organizations to build and manage secure, isolated cloud environments.

Which command would you use to see which VMs are running on your KVM device?

Options:

A. virt-install

B. virsh net-list

C. virsh list

D. VBoxManage list runningvms

Answer:

C

Explanation:

KVM (Kernel-based Virtual Machine) is a popular open-source virtualization technology that allows you to run virtual machines (VMs) on Linux systems. The virsh command-line tool is used to manage KVM VMs. Let’s analyze each option:

A. virt-install

Incorrect: The virt-install command is used to create and provision new virtual machines. It is not used to list running VMs.

B. virsh net-list

Incorrect: The virsh net-list command lists virtual networks configured in the KVM environment. It does not display information about running VMs.

C. virsh list

Correct: The virsh list command displays the status of virtual machines managed by the KVM hypervisor. By default, it shows only running VMs. You can use the --all flag to include stopped VMs in the output.

D. VBoxManage list runningvms

Incorrect: The VBoxManage command is used with Oracle VirtualBox, not KVM. It is unrelated to KVM virtualization.

Why virsh list?

Purpose-Built for KVM: virsh is the standard tool for managing KVM virtual machines, and virsh list is specifically designed to show the status of running VMs.

Simplicity: The command is straightforward and provides the required information without additional complexity.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including KVM. Managing virtual machines using tools like virsh is a fundamental skill for operating virtualized environments.

For example, Juniper Contrail supports integration with KVM hypervisors, enabling the deployment and management of virtualized network functions (VNFs). Proficiency with KVM tools ensures efficient management of virtualized infrastructure.
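For scripting, the tabular output of virsh list can be parsed with a few lines of Python. The sketch below works on a hypothetical sample of virsh list --all output (the VM names are made up) and assumes the usual three-column layout with a header line and a separator line; it does not call virsh itself.

```python
# Hypothetical sample of `virsh list --all` output.
SAMPLE = """\
 Id   Name     State
--------------------------
 1    myvSRX   running
 -    testvm   shut off
"""


def parse_virsh_list(text):
    """Turn the table into a list of {"id", "name", "state"} dictionaries."""
    domains = []
    for line in text.splitlines()[2:]:  # skip header and separator lines
        if not line.strip():
            continue
        # Split into at most three fields so "shut off" stays together.
        dom_id, name, state = line.split(None, 2)
        domains.append({"id": dom_id, "name": name, "state": state.strip()})
    return domains


running = [d["name"] for d in parse_virsh_list(SAMPLE) if d["state"] == "running"]
print(running)
```

On a KVM host, the text could instead come from subprocess.run(["virsh", "list", "--all"], capture_output=True, text=True).stdout.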

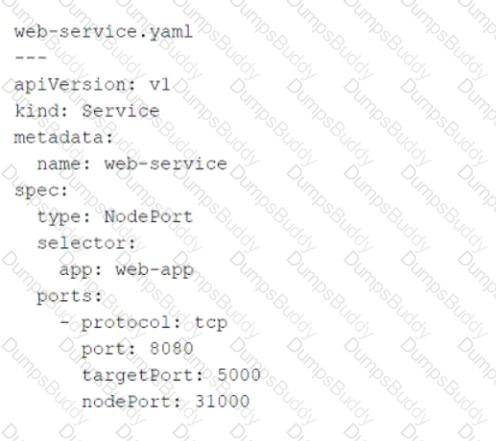

Click the Exhibit button.

Referring to the exhibit, which port number would external users use to access the WEB application?

Options:

A. 80

B. 8080

C. 31000

D. 5000

Answer:

C

Explanation:

The YAML file provided in the exhibit defines a Kubernetes Service object of type NodePort. Let’s break down the key components of the configuration and analyze how external users access the WEB application:

Key Fields in the YAML File:

type: NodePort:

This specifies that the service is exposed on a static port on each node in the cluster. External users can access the service using the node's IP address and the assigned nodePort.

port: 8080:

This is the port on which the service is exposed internally within the Kubernetes cluster. Other services or pods within the cluster can communicate with this service using port 8080.

targetPort: 5000:

This is the port on which the actual application (WEB application) is running inside the pod. The service forwards traffic from port: 8080 to targetPort: 5000.

nodePort: 31000:

This is the port on the node (host machine) where the service is exposed externally. External users will use this port to access the WEB application.

How External Users Access the WEB Application:

External users access the WEB application using the node's IP address and the nodePort value (31000).

The Kubernetes service listens on this port and forwards incoming traffic to the appropriate pods running the WEB application.

Why Not Other Options?

A. 80: Port 80 is commonly used for HTTP traffic, but it is not specified in the YAML file. The service does not expose port 80 externally.

B. 8080: Port 8080 is the internal port used within the Kubernetes cluster. It is not the port exposed to external users.

D. 5000: Port 5000 is the target port where the application runs inside the pod. It is not directly accessible to external users.

Why 31000?

NodePort Service Type: The NodePort service type exposes the application on a high-numbered port (default range: 30000–32767) on each node in the cluster.

External Accessibility: External users must use the nodePort value (31000) along with the node's IP address to access the WEB application.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes networking concepts, including service types like ClusterIP, NodePort, and LoadBalancer. Understanding how NodePort services work is essential for exposing applications to external users in Kubernetes environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking features, such as load balancing and network segmentation, for services like the one described in the exhibit.
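The relationship between the three port fields can be sketched in Python by modeling the Service as a dictionary. The port values come from the explanation above; the service name, selector label, and node IP address are hypothetical, since the exhibit itself is not reproduced here.

```python
# Service manifest modeled as a dictionary. Port values follow the
# explanation; the name and selector are hypothetical.
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "NodePort",
        "selector": {"app": "web"},
        "ports": [{"port": 8080, "targetPort": 5000, "nodePort": 31000}],
    },
}


def external_port(svc):
    """Return the port external users connect to, or None if not NodePort."""
    spec = svc["spec"]
    if spec.get("type") != "NodePort":
        return None
    return spec["ports"][0]["nodePort"]


def traffic_path(svc, node_ip):
    """List the hops a request takes from outside the cluster to the pod."""
    p = svc["spec"]["ports"][0]
    return [f"{node_ip}:{p['nodePort']}", f"service:{p['port']}", f"pod:{p['targetPort']}"]


print(external_port(service))
print(" -> ".join(traffic_path(service, "203.0.113.5")))
```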

Your organization has legacy virtual machine workloads that need to be managed within a Kubernetes deployment.

Which Kubernetes add-on would be used to satisfy this requirement?

Options:

A. ADOT

B. Canal

C. KubeVirt

D. Romana

Answer:

C

Explanation:

Kubernetes is designed primarily for managing containerized workloads, but it can also support legacy virtual machine (VM) workloads through specific add-ons. Let’s analyze each option:

A. ADOT

Incorrect: The AWS Distro for OpenTelemetry (ADOT) is a tool for collecting and exporting telemetry data (metrics, logs, traces). It is unrelated to running VMs in Kubernetes.

B. Canal

Incorrect: Canal is a networking solution that combines Flannel and Calico to provide overlay networking and network policy enforcement in Kubernetes. It does not support VM workloads.

C. KubeVirt

Correct: KubeVirt is a Kubernetes add-on that enables the management of virtual machines alongside containers in a Kubernetes cluster. It allows organizations to run legacy VM workloads while leveraging Kubernetes for orchestration.

D. Romana

Incorrect: Romana is a network policy engine for Kubernetes that provides security and segmentation. It does not support VM workloads.

Why KubeVirt?

VM Support in Kubernetes: KubeVirt extends Kubernetes to manage both containers and VMs, enabling organizations to transition legacy workloads to a Kubernetes environment.

Unified Orchestration: By integrating VMs into Kubernetes, KubeVirt simplifies the management of hybrid workloads.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes extensions like KubeVirt as part of its curriculum on cloud-native architectures. Understanding how to integrate legacy workloads into Kubernetes is essential for modernizing IT infrastructure.

For example, Juniper Contrail integrates with Kubernetes and KubeVirt to provide networking and security for hybrid workloads.

Which Kubernetes component guarantees the availability of ReplicaSet pods on one or more nodes?

Options:

A. kube-proxy

B. kube-scheduler

C. kube controller

D. kubelet

Answer:

C

Explanation:

Kubernetes components work together to ensure the availability and proper functioning of resources like ReplicaSets. Let’s analyze each option:

A. kube-proxy

Incorrect: The kube-proxy manages network communication for services and pods by implementing load balancing and routing rules. It does not guarantee the availability of ReplicaSet pods.

B. kube-scheduler

Incorrect: The kube-scheduler is responsible for assigning pods to nodes based on resource availability and other constraints. While it plays a role in pod placement, it does not ensure the availability of ReplicaSet pods.

C. kube controller

Correct: The kube controller (specifically the ReplicaSet controller, which runs inside the kube-controller-manager) ensures that the desired number of pods specified in a ReplicaSet are running at all times. If a pod crashes or is deleted, the controller creates a new one to maintain the desired state.

D. kubelet

Incorrect: The kubelet ensures that containers are running as expected on a node but does not manage the overall availability of ReplicaSet pods across the cluster.

Why Kube Controller?

ReplicaSet Management: The ReplicaSet controller within the kube controller manager ensures that the specified number of pod replicas are always available.

Self-Healing: If a pod fails or is deleted, the controller automatically creates a new pod to maintain the desired state.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes control plane components, including the kube controller. Understanding the role of the kube controller is essential for managing the availability and scalability of Kubernetes resources.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features, relying on the kube controller to maintain the desired state of ReplicaSets.
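The self-healing behavior described above is a reconciliation loop: compare the desired replica count with what is running and correct the difference. The Python sketch below is a toy model of that idea, not the actual controller code; the function name, pod naming scheme, and replica count are hypothetical.

```python
import itertools

# Counter used to give each replacement pod a fresh hypothetical name.
_pod_ids = itertools.count(1)


def reconcile(desired_replicas, running_pods):
    """Return a pod list that matches the desired replica count."""
    pods = list(running_pods)
    while len(pods) < desired_replicas:
        pods.append(f"web-{next(_pod_ids)}")  # create a missing replica
    while len(pods) > desired_replicas:
        pods.pop()  # remove a surplus replica
    return pods


pods = reconcile(3, [])        # initial rollout creates three pods
crashed = pods[0]
pods.remove(crashed)           # simulate a pod crash
pods = reconcile(3, pods)      # the controller replaces the lost pod
print("crashed:", crashed)
print("running:", pods)
```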

Which two statements are correct about Network Functions Virtualization (NFV)? (Choose two.)

Options:

A. The NFV framework explains how VNFs fit into the whole solution.

B. The NFV Infrastructure (NFVI) is a component of NFV.

C. The NFV Infrastructure (NFVI) is not a component of NFV.

D. The NFV framework is defined by the W3C.

Answer:

A, B

Explanation:

Network Functions Virtualization (NFV) is a framework designed to virtualize network services traditionally run on proprietary hardware. It decouples network functions from dedicated hardware appliances and implements them as software running on standard servers or virtual machines. Let’s analyze each statement:

A. The NFV framework explains how VNFs fit into the whole solution.

Correct: The NFV framework provides a structured approach to deploying and managing Virtualized Network Functions (VNFs). It defines how VNFs interact with other components, such as the NFV Infrastructure (NFVI), Management and Orchestration (MANO), and the underlying hardware.

B. The NFV Infrastructure (NFVI) is a component of NFV.

Correct: The NFV Infrastructure (NFVI) is a critical part of the NFV architecture. It includes the physical and virtual resources (e.g., compute, storage, networking) that host and support VNFs. NFVI acts as the foundation for deploying and running virtualized network functions.

C. The NFV Infrastructure (NFVI) is not a component of NFV.

Incorrect: This statement contradicts the NFV architecture. NFVI is indeed a core component of NFV, providing the necessary infrastructure for VNFs.

D. The NFV framework is defined by the W3C.

Incorrect: The NFV framework is defined by the European Telecommunications Standards Institute (ETSI), not the W3C. ETSI’s NFV Industry Specification Group (ISG) established the standards and architecture for NFV.

Why These Answers?

Framework Explanation: The NFV framework provides a comprehensive view of how VNFs integrate into the overall solution, ensuring scalability and flexibility.

NFVI Role: NFVI is essential for hosting and supporting VNFs, making it a fundamental part of the NFV architecture.

JNCIA Cloud References:

The JNCIA-Cloud certification covers NFV as part of its cloud infrastructure curriculum. Understanding the NFV framework and its components is crucial for deploying and managing virtualized network functions in cloud environments.

For example, Juniper Contrail integrates with NFV frameworks to deploy and manage VNFs, enabling service providers to deliver network services efficiently and cost-effectively.

Which virtualization method requires less duplication of hardware resources?

Options:

A. OS-level virtualization

B. hardware-assisted virtualization

C. full virtualization

D. paravirtualization

Answer:

A

Explanation:

Virtualization methods differ in how they utilize hardware resources. Let’s analyze each option:

A. OS-level virtualization

Correct: OS-level virtualization (e.g., containers) uses the host operating system’s kernel to run isolated user-space instances (containers). Since containers share the host OS kernel, there is less duplication of hardware resources compared to other virtualization methods.

B. hardware-assisted virtualization

Incorrect: Hardware-assisted virtualization (e.g., Intel VT-x, AMD-V) enables full virtual machines (VMs) to run on physical hardware. Each VM includes its own operating system, leading to duplication of resources like memory and CPU.

C. full virtualization

Incorrect: Full virtualization involves running a complete guest operating system on top of a hypervisor. Each VM requires its own OS, resulting in significant resource duplication.

D. paravirtualization

Incorrect: Paravirtualization modifies the guest operating system to communicate directly with the hypervisor. While it reduces some overhead compared to full virtualization, it still requires separate operating systems for each VM, leading to resource duplication.

Why OS-Level Virtualization?

Resource Efficiency: Containers share the host OS kernel, eliminating the need for multiple operating systems and reducing resource duplication.

Lightweight: Containers are faster to start and consume fewer resources compared to VMs.

JNCIA Cloud References:

The JNCIA-Cloud certification emphasizes understanding virtualization technologies, including OS-level virtualization. Containers are a key component of modern cloud-native architectures due to their efficiency and scalability.

For example, Juniper Contrail integrates with container orchestration platforms like Kubernetes to manage OS-level virtualization workloads efficiently.

Which two statements about Kubernetes are correct? (Choose two.)

Options:

A. Kubernetes is compatible with the container open container runtime.

B. Kubernetes requires the Docker daemon to run Docker containers.

C. A container is the smallest unit of computing that you can manage with Kubernetes.

D. A Kubernetes cluster must contain at least one control plane node.

Answer:

A, D

Explanation:

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. Let’s analyze each statement:

A. Kubernetes is compatible with the container open container runtime.

Correct: Kubernetes supports the Open Container Initiative (OCI) runtime standards, which ensure compatibility with various container runtimes like containerd, cri-o, and others. This flexibility allows Kubernetes to work with different container engines beyond just Docker.

B. Kubernetes requires the Docker daemon to run Docker containers.

Incorrect: While Kubernetes historically used Docker as its default container runtime, it no longer depends on the Docker daemon. Instead, Kubernetes uses the Container Runtime Interface (CRI) to interact with container runtimes like containerd or cri-o. Docker’s runtime has been replaced by containerd in most modern Kubernetes deployments.

C. A container is the smallest unit of computing that you can manage with Kubernetes.

Incorrect: The smallest deployable unit that Kubernetes creates and manages is the Pod, a group of one or more containers that share network and storage resources. Containers encapsulate application code, dependencies, and configurations, but Kubernetes schedules and manages them only as part of a Pod.

D. A Kubernetes cluster must contain at least one control plane node.

Correct: The control plane (API server, scheduler, controller manager, and etcd) manages the cluster, so every cluster needs at least one control plane node. Worker nodes are also needed to run application workloads, although in small test clusters a single node can fill both roles.

Why These Answers?

Compatibility with OCI Runtimes: Kubernetes’ support for OCI-compliant runtimes ensures flexibility and avoids vendor lock-in.

Control Plane Requirement: Without a control plane there is no API server, scheduler, or controller manager to accept and reconcile the desired state, so a cluster cannot exist without one.

JNCIA Cloud References:

The JNCIA-Cloud certification covers Kubernetes as part of its container orchestration curriculum. Understanding Kubernetes architecture, compatibility, and core concepts is essential for deploying and managing containerized applications in cloud environments.

For example, Juniper Contrail integrates with Kubernetes to provide advanced networking and security features for containerized workloads. Proficiency with Kubernetes ensures seamless operation of cloud-native applications.