- Home

- Snowflake

- SnowPro Advanced: Architect

- ARA-C01

- SnowPro Advanced: Architect Certification Exam Questions and Answers

ARA-C01 SnowPro Advanced: Architect Certification Exam Questions and Answers

Which statements describe characteristics of the use of materialized views in Snowflake? (Choose two.)

Options:

They can include ORDER BY clauses.

They cannot include nested subqueries.

They can include context functions, such as CURRENT_TIME().

They can support MIN and MAX aggregates.

They can support inner joins, but not outer joins.

Answer:

B, DExplanation:

According to the Snowflake documentation, materialized views have some limitations on the query specification that defines them. One of these limitations is that they cannot include nested subqueries, such as subqueries in the FROM clause or scalar subqueries in the SELECT list. Another limitation is that they cannot include ORDER BY clauses, context functions (such as CURRENT_TIME()), or outer joins. However, materialized views can support MIN and MAX aggregates, as well as other aggregate functions, such as SUM, COUNT, and AVG.

Limitations on Creating Materialized Views | Snowflake Documentation

Working with Materialized Views | Snowflake Documentation

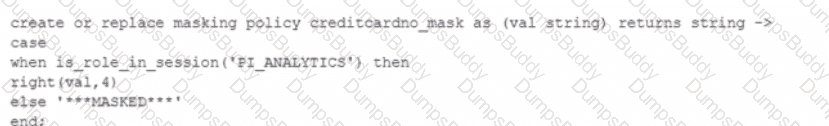

A Snowflake Architect created a new data share and would like to verify that only specific records in secure views are visible within the data share by the consumers.

What is the recommended way to validate data accessibility by the consumers?

Options:

Create reader accounts as shown below and impersonate the consumers by logging in with their credentials.create managed account reader_acctl admin_name = userl , adroin_password ■ 'Sdfed43da!44T , type = reader;

Create a row access policy as shown below and assign it to the data share.create or replace row access policy rap_acct as (acct_id varchar) returns boolean -> case when 'acctl_role' = current_role() then true else false end;

Set the session parameter called SIMULATED_DATA_SHARING_C0NSUMER as shown below in order to impersonate the consumer accounts.alter session set simulated_data_sharing_consumer - 'Consumer Acctl*

Alter the share settings as shown below, in order to impersonate a specific consumer account.alter share sales share set accounts = 'Consumerl’ share restrictions = true

Answer:

CExplanation:

The SIMULATED_DATA_SHARING_CONSUMER session parameter allows a data provider to simulate the data access of a consumer account without creating a reader account or logging in with the consumer credentials. This parameter can be used to validate the data accessibility by the consumers in a data share, especially when using secure views or secure UDFs that filter data based on the current account or role. By setting this parameter to the name of a consumer account, the data provider can see the same data as the consumer would see when querying the shared database. This is a convenient and efficient way to test the data sharing functionality and ensure that only the intended data is visible to the consumers.

An Architect with the ORGADMIN role wants to change a Snowflake account from an Enterprise edition to a Business Critical edition.

How should this be accomplished?

Options:

Run an ALTER ACCOUNT command and create a tag of EDITION and set the tag to Business Critical.

Use the account's ACCOUNTADMIN role to change the edition.

Failover to a new account in the same region and specify the new account's edition upon creation.

Contact Snowflake Support and request that the account's edition be changed.

Answer:

DExplanation:

To change the edition of a Snowflake account, an organization administrator (ORGADMIN) cannot directly alter the account settings through SQL commands or the Snowflake interface. The proper procedure is to contact Snowflake Support to request an edition change for the account. This ensures that the change is managed correctly and aligns with Snowflake’s operational protocols.

What is a key consideration when setting up search optimization service for a table?

Options:

Search optimization service works best with a column that has a minimum of 100 K distinct values.

Search optimization service can significantly improve query performance on partitioned external tables.

Search optimization service can help to optimize storage usage by compressing the data into a GZIP format.

The table must be clustered with a key having multiple columns for effective search optimization.

Answer:

AExplanation:

A. The Search Optimization Service is designed to accelerate the performance of queries that use filters on large tables. One of the key considerations for its effectiveness is using it with tables where the columns used in the filter conditions have a high number of distinct values, typically in the hundreds of thousands or more. This is because the service creates a map-reduce-like index on the column to speed up queries that use point lookups or range scans on that column. The more unique values there are, the more effective the index is at narrowing down the search space.

In a managed access schema, what are characteristics of the roles that can manage object privileges? (Select TWO).

Options:

Users with the SYSADMIN role can grant object privileges in a managed access schema.

Users with the SECURITYADMIN role or higher, can grant object privileges in a managed access schema.

Users who are database owners can grant object privileges in a managed access schema.

Users who are schema owners can grant object privileges in a managed access schema.

Users who are object owners can grant object privileges in a managed access schema.

Answer:

B, DExplanation:

In a managed access schema, the privilege management is centralized with the schema owner, who has the authority to grant object privileges within the schema. Additionally, the SECURITYADMIN role has the capability to manage object grants globally, which includes within managed access schemas. Other roles, such as SYSADMIN or database owners, do not inherently have this privilege unless explicitly granted.

A global company with operations in North America, Europe, and Asia needs to secure its Snowflake environment with a focus on data privacy, secure connectivity, and access control. The company uses AWS and must ensure secure data transfers that comply with regional regulations.

How can these requirements be met? (Select TWO).

Options:

Configure SAML 2.0 to authenticate users in the Snowflake environment.

Configure detailed logging and monitoring of all network traffic using Snowflake native capabilities.

Use public endpoints with SSL encryption to secure data transfers.

Configure network policies to restrict access based on corporate IP ranges.

Use AWS PrivateLink for private connectivity between Snowflake and AWS VPCs.

Answer:

D, EExplanation:

For global enterprises handling sensitive data, Snowflake architects must design for both secure access control and secure network connectivity. Network policies allow administrators to restrict access to Snowflake accounts based on approved IP address ranges, ensuring that only corporate offices or trusted networks can connect (Answer D). This is a core Snowflake security control and is frequently tested in the SnowPro Architect exam.

AWS PrivateLink provides private, non-internet-based connectivity between Snowflake and customer AWS VPCs (Answer E). This ensures that data traffic does not traverse the public internet, which is critical for meeting regional regulatory and compliance requirements related to data privacy and sovereignty. PrivateLink also simplifies security posture by reducing exposure to public endpoints.

While SAML authentication is important for identity management, it does not address secure data transport. Public endpoints with SSL are secure but do not meet stricter regulatory requirements when private connectivity is mandated. Snowflake does not provide detailed packet-level network traffic monitoring. Together, network policies and PrivateLink provide strong, compliant, and regionally secure Snowflake architectures.

=========

QUESTION NO: 17 [Cost Control and Resource Management]

An Architect plans to stream data using the Snowflake Connector for Kafka in Snowpipe.

What setting will optimize costs?

A. Set buffer.flush.time = 1.

B. Set buffer.count.records = 1.

C. Set buffer.size.bytes = 10 MB.

D. Maximize the number of micro-partitions.

Answer: C

When using the Snowflake Connector for Kafka with Snowpipe, cost efficiency depends on batching data into appropriately sized files before ingestion. Snowflake charges Snowpipe costs based on the number of files ingested and compute used for loading. Very small files significantly increase overhead and cost.

Setting buffer.size.bytes = 10 MB allows the connector to batch records into reasonably sized files before flushing them to Snowflake (Answer C). This strikes a balance between ingestion latency and cost efficiency and aligns with Snowflake best practices for streaming ingestion. Extremely small buffer sizes or very frequent flush intervals (such as 1 record or 1 second) lead to excessive file creation and higher Snowpipe costs.

Maximizing micro-partitions is not configurable directly and is counterproductive for cost and performance. For SnowPro Architect candidates, this question emphasizes the importance of batching and file sizing strategies when designing streaming ingestion pipelines with Kafka and Snowpipe.

=========

QUESTION NO: 18 [Security and Access Management]

An Architect is creating a new database role and is considering using the OR REPLACE keywords in the CREATE DATABASE ROLE command.

What should be considered before using OR REPLACE?

A. The dropped database role cannot be recreated.

B. Recreating a database role drops it from any shares it is granted to.

C. The OR REPLACE keywords are unsupported for database roles.

D. The database role can only be dropped by a role with MANAGE GRANTS privilege.

Answer: B

Using CREATE OR REPLACE DATABASE ROLE in Snowflake drops and recreates the role if it already exists. While this can simplify deployment scripts, it has important side effects that architects must understand. When a database role is dropped and recreated, all grants associated with that role—including grants to shares—are removed (Answer B). This can unintentionally break data sharing configurations or downstream access.

Although the role itself can be recreated, the loss of grants requires manual remediation, increasing operational risk. The OR REPLACE syntax is supported for database roles, and while privilege requirements matter, they are not the key risk being tested here.

For SnowPro Architect candidates, this question reinforces best practices around role lifecycle management and governance. In production environments, replacing roles should be done cautiously, with a clear understanding of grant dependencies and the potential impact on security and data sharing.

=========

QUESTION NO: 19 [Performance Optimization and Monitoring]

A large table is accessed by multiple teams using point-lookup queries on different columns. Query performance is poor.

What can be done to ensure good performance for all teams?

A. Build multiple materialized views with cluster keys on each column.

B. Build materialized views and allow Snowflake to replace the base table.

C. Use the search optimization service on the underlying table.

D. Create clustering keys using a combination of all lookup columns.

Answer: C

When multiple teams perform selective point-lookups on different columns of the same large table, traditional clustering is not effective because a table can have only one clustering key. Creating a composite clustering key across many columns often leads to poor clustering depth and limited pruning benefits.

The Search Optimization Service (SOS) is specifically designed for this scenario (Answer C). It enables fast point-lookups across multiple columns without requiring table reclustering. SOS maintains additional search access paths that accelerate highly selective predicates and is ideal when queries vary across many lookup columns.

Materialized views introduce additional storage, maintenance overhead, and operational complexity, and Snowflake does not automatically replace base tables with materialized views. This question tests an architect’s ability to choose the right optimization technique for multi-access-path workloads, a core SnowPro Architect competency.

=========

QUESTION NO: 20 [Snowflake Ecosystem and Integrations]

What is a characteristic of loading data into Snowflake using the Snowflake Connector for Kafka?

A. The Connector only works in AWS regions.

B. The Connector works with all file formats.

C. The Connector creates and manages its own stage, file format, and pipe objects.

D. Loads using the Connector have lower latency than Snowpipe and ingest data in real time.

Answer: C

The Snowflake Connector for Kafka simplifies streaming ingestion by automatically managing Snowflake objects required for ingestion. It creates and manages internal stages, file formats, and Snowpipe pipes without requiring manual configuration (Answer C). This reduces operational overhead and simplifies deployment.

The connector is cloud-agnostic and works across supported Snowflake clouds, not just AWS. It supports a defined set of formats (such as JSON and Avro), not all possible formats. While the connector enables near real-time ingestion, it still uses Snowpipe under the hood and does not guarantee lower latency than Snowpipe Streaming.

For SnowPro Architect candidates, this question emphasizes understanding how Snowflake-managed connectors abstract infrastructure details while still relying on Snowflake-native ingestion services.

Which command will create a schema without Fail-safe and will restrict object owners from passing on access to other users?

Options:

create schema EDW.ACCOUNTING WITH MANAGED ACCESS;

create schema EDW.ACCOUNTING WITH MANAGED ACCESS DATA_RETENTION_TIME_IN_DAYS - 7;

create TRANSIENT schema EDW.ACCOUNTING WITH MANAGED ACCESS DATA_RETENTION_TIME_IN_DAYS = 1;

create TRANSIENT schema EDW.ACCOUNTING WITH MANAGED ACCESS DATA_RETENTION_TIME_IN_DAYS = 7;

Answer:

DExplanation:

A transient schema in Snowflake is designed without a Fail-safe period, meaning it does not incur additional storage costs once it leaves Time Travel, and it is not protected by Fail-safe in the event of a data loss. The WITH MANAGED ACCESS option ensures that all privilege grants, including future grants on objects within the schema, are managed by the schema owner, thus restricting object owners from passing on access to other users1.

References =

•Snowflake Documentation on creating schemas1

•Snowflake Documentation on configuring access control2

•Snowflake Documentation on understanding and viewing Fail-safe3

A company has a Snowflake environment running in AWS us-west-2 (Oregon). The company needs to share data privately with a customer who is running their Snowflake environment in Azure East US 2 (Virginia).

What is the recommended sequence of operations that must be followed to meet this requirement?

Options:

1. Create a share and add the database privileges to the share2. Create a new listing on the Snowflake Marketplace3. Alter the listing and add the share4. Instruct the customer to subscribe to the listing on the Snowflake Marketplace

1. Ask the customer to create a new Snowflake account in Azure EAST US 2 (Virginia)2. Create a share and add the database privileges to the share3. Alter the share and add the customer's Snowflake account to the share

1. Create a new Snowflake account in Azure East US 2 (Virginia)2. Set up replication between AWS us-west-2 (Oregon) and Azure East US 2 (Virginia) for the database objects to be shared3. Create a share and add the database privileges to the share4. Alter the share and add the customer's Snowflake account to the share

1. Create a reader account in Azure East US 2 (Virginia)2. Create a share and add the database privileges to the share3. Add the reader account to the share4. Share the reader account's URL and credentials with the customer

Answer:

CExplanation:

Option C is the correct answer because it allows the company to share data privately with the customer across different cloud platforms and regions. The company can create a new Snowflake account in Azure East US 2 (Virginia) and set up replication between AWS us-west-2 (Oregon) and Azure East US 2 (Virginia) for the database objects to be shared. This way, the company can ensure that the data is always up to date and consistent in both accounts. The company can then create a share and add the database privileges to the share, and alter the share and add the customer’s Snowflake account to the share. The customer can then access the shared data from their own Snowflake account in Azure East US 2 (Virginia).

Option A is incorrect because the Snowflake Marketplace is not a private way of sharing data. The Snowflake Marketplace is a public data exchange platform that allows anyone to browse and subscribe to data sets from various providers. The company would not be able to control who can access their data if they use the Snowflake Marketplace.

Option B is incorrect because it requires the customer to create a new Snowflake account in Azure East US 2 (Virginia), which may not be feasible or desirable for the customer. The customer may already have an existing Snowflake account in a different cloud platform or region, and may not want to incur additional costs or complexity by creating a new account.

Option D is incorrect because it involves creating a reader account in Azure East US 2 (Virginia), which is a limited and temporary way of sharing data. A reader account is a special type of Snowflake account that can only access data from a single share, and has a fixed duration of 30 days. The company would have to manage the reader account’s URL and credentials, and renew the account every 30 days. The customer would not be able to use their own Snowflake account to access the shared data, and would have to rely on the company’s reader account.

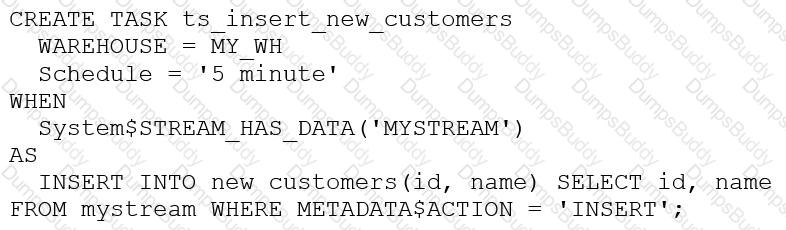

The following DDL command was used to create a task based on a stream:

Assuming MY_WH is set to auto_suspend – 60 and used exclusively for this task, which statement is true?

Options:

The warehouse MY_WH will be made active every five minutes to check the stream.

The warehouse MY_WH will only be active when there are results in the stream.

The warehouse MY_WH will never suspend.

The warehouse MY_WH will automatically resize to accommodate the size of the stream.

Answer:

BExplanation:

The warehouse MY_WH will only be active when there are results in the stream. This is because the task is created based on a stream, which means that the task will only be executed when there are new data in the stream. Additionally, the warehouse is set to auto_suspend - 60, which means that the warehouse will automatically suspend after 60 seconds of inactivity. Therefore, the warehouse will only be active when there are results in the stream. References:

[CREATE TASK | Snowflake Documentation]

[Using Streams and Tasks | Snowflake Documentation]

[CREATE WAREHOUSE | Snowflake Documentation]

An Architect is designing a solution that will be used to process changed records in an orders table. Newly-inserted orders must be loaded into the f_orders fact table, which will aggregate all the orders by multiple dimensions (time, region, channel, etc.). Existing orders can be updated by the sales department within 30 days after the order creation. In case of an order update, the solution must perform two actions:

1. Update the order in the f_0RDERS fact table.

2. Load the changed order data into the special table ORDER _REPAIRS.

This table is used by the Accounting department once a month. If the order has been changed, the Accounting team needs to know the latest details and perform the necessary actions based on the data in the order_repairs table.

What data processing logic design will be the MOST performant?

Options:

Useone stream and one task.

Useone stream and two tasks.

Usetwo streams and one task.

Usetwo streams and two tasks.

Answer:

BExplanation:

The most performant design for processing changed records, considering the need to both update records in thef_ordersfact table and load changes into theorder_repairstable, is to use one stream and two tasks. The stream will monitor changes in the orders table, capturing both inserts and updates. The first task would apply these changes to thef_ordersfact table, ensuring all dimensions are accurately represented. The second task would use the same stream to insert relevant changes into theorder_repairstable, which is critical for the Accounting department's monthly review. This method ensures efficient processing by minimizing the overhead of managing multiple streams and synchronizing between them, while also allowing specific tasks to optimize for their target operations.

What Snowflake system functions are used to view and or monitor the clustering metadata for a table? (Select TWO).

Options:

SYSTEMSCLUSTERING

SYSTEMSTABLE_CLUSTERING

SYSTEMSCLUSTERING_DEPTH

SYSTEMSCLUSTERING_RATIO

SYSTEMSCLUSTERING_INFORMATION

Answer:

C, EExplanation:

The Snowflake system functions used to view and monitor the clustering metadata for a table are:

SYSTEM$CLUSTERING_INFORMATION

SYSTEM$CLUSTERING_DEPTH

Comprehensive But Short Explanation:

TheSYSTEM$CLUSTERING_INFORMATIONfunction in Snowflake returns a variety of clustering information for a specified table. This information includes the average clustering depth, total number of micro-partitions, total constant partition count, average overlaps, average depth, and a partition depth histogram. This function allows you to specify either one or multiple columns for which the clustering information is returned, and it returns this data in JSON format.

TheSYSTEM$CLUSTERING_DEPTHfunction computes the average depth of a table based on specified columns or the clustering key defined for the table. A lower average depth indicates that the table is better clustered with respect to the specified columns. This function also allows specifying columns to calculate the depth, and the values need to be enclosed in single quotes.

What considerations need to be taken when using database cloning as a tool for data lifecycle management in a development environment? (Select TWO).

Options:

Any pipes in the source are not cloned.

Any pipes in the source referring to internal stages are not cloned.

Any pipes in the source referring to external stages are not cloned.

The clone inherits all granted privileges of all child objects in the source object, including the database.

The clone inherits all granted privileges of all child objects in the source object, excluding the database.

Answer:

A, CA company is designing its serving layer for data that is in cloud storage. Multiple terabytes of the data will be used for reporting. Some data does not have a clear use case but could be useful for experimental analysis. This experimentation data changes frequently and is sometimes wiped out and replaced completely in a few days.

The company wants to centralize access control, provide a single point of connection for the end-users, and maintain data governance.

What solution meets these requirements while MINIMIZING costs, administrative effort, and development overhead?

Options:

Import the data used for reporting into a Snowflake schema with native tables. Then create external tables pointing to the cloud storage folders used for the experimentation data. Then create two different roles with grants to the different datasets to match the different user personas, and grant these roles to the corresponding users.

Import all the data in cloud storage to be used for reporting into a Snowflake schema with native tables. Then create a role that has access to this schema and manage access to the data through that role.

Import all the data in cloud storage to be used for reporting into a Snowflake schema with native tables. Then create two different roles with grants to the different datasets to match the different user personas, and grant these roles to the corresponding users.

Import the data used for reporting into a Snowflake schema with native tables. Then create views that have SELECT commands pointing to the cloud storage files for the experimentation data. Then create two different roles to match the different user personas, and grant these roles to the corresponding users.

Answer:

AExplanation:

The most cost-effective and administratively efficient solution is to use a combination of native and external tables. Native tables for reporting data ensure performance and governance, while external tables allow for flexibility with frequently changing experimental data. Creating roles with specific grants to datasets aligns with the principle of least privilege, centralizing access control and simplifying user management12.

References

•Snowflake Documentation on Optimizing Cost1.

•Snowflake Documentation on Controlling Cost2.

An Architect is designing Snowflake architecture to support fast Data Analyst reporting. To optimize costs, the virtual warehouse is configured to auto-suspend after 2 minutes of idle time. Queries are run once in the morning after refresh, but later queries run slowly.

Why is this occurring?

Options:

The warehouse is not large enough.

The warehouse was not configured as a multi-cluster warehouse.

The warehouse was not created with USE_CACHE = TRUE.

When the warehouse was suspended, the cache was dropped.

Answer:

DExplanation:

Snowflake virtual warehouses maintain a local result and data cache only while the warehouse is running. When a warehouse is suspended—whether manually or via auto-suspend—the local cache is cleared. As a result, subsequent queries cannot benefit from cached data and must re-scan data from remote storage, leading to slower execution (Answer D).

Snowflake does maintain a global result cache at the cloud services layer, but it is only used when the exact same query text is re-executed and the underlying data has not changed. In many analytical workloads, queries vary slightly, preventing reuse of the result cache.

Warehouse size and multi-cluster configuration impact concurrency and throughput, not cache persistence. There is no USE_CACHE parameter in Snowflake. This question tests an architect’s understanding of Snowflake caching behavior and the tradeoff between aggressive auto-suspend for cost control and cache reuse for performance.

=========

QUESTION NO: 32 [Security and Access Management]

A company has two databases, DB1 and DB2.

Role R1 has SELECT on DB1.

Role R2 has SELECT on DB2.

Users should normally access only one database, but a small group must access both databases in the same query with minimal operational overhead.

What is the best approach?

A. Set DEFAULT_SECONDARY_ROLE to R2.

B. Grant R2 to users and use USE_SECONDARY_ROLES for SELECT.

C. Grant R2 to R1 to use privilege inheritance.

D. Grant R2 to users and require USE SECONDARY ROLES.

Answer: B

Snowflake supports secondary roles to allow users to activate additional privileges without changing their primary role. Granting R2 to the users and enabling USE_SECONDARY_ROLES for SELECT allows those users to access both DB1 and DB2 in a single query, while keeping their default role unchanged (Answer B).

This approach minimizes operational overhead because it avoids role restructuring or privilege inheritance changes. It also maintains least privilege by ensuring that users only activate additional access when needed. Setting a default secondary role applies automatically and may unintentionally broaden access. Granting R2 to R1 affects all users with R1, which violates the requirement to limit access to a small group.

This pattern is a common SnowPro Architect design for cross-database access control.

=========

QUESTION NO: 33 [Performance Optimization and Monitoring]

How can an Architect enable optimal clustering to enhance performance for different access paths on a given table?

A. Create multiple clustering keys for a table.

B. Create multiple materialized views with different cluster keys.

C. Create super projections that automatically create clustering.

D. Create a clustering key containing all access path columns.

Answer: B

Snowflake allows only one clustering key per table, which limits its effectiveness when multiple access paths exist. Creating a composite clustering key that includes many columns often leads to poor clustering depth and limited pruning.

Materialized views provide an effective alternative. Each materialized view can be clustered independently, allowing architects to tailor physical data organization to specific query patterns (Answer B). Queries targeting different access paths can then leverage the appropriate materialized view, achieving better pruning and performance.

Super projections are not a Snowflake feature. Creating multiple clustering keys on a single table is not supported. This question reinforces SnowPro Architect knowledge of advanced performance design techniques using materialized views.

=========

QUESTION NO: 34 [Cost Control and Resource Management]

An Architect configures the following timeouts and creates a task using a size X-Small warehouse. The task’s INSERT statement will take ~40 hours.

How long will the INSERT execute?

A. 1 minute

B. 5 minutes

C. 1 hour

D. 40 hours

Answer: A

Tasks in Snowflake are governed by the USER_TASK_TIMEOUT_MS parameter, which specifies the maximum execution time for a single task run. In this scenario, USER_TASK_TIMEOUT_MS = 60000, which equals 1 minute. This timeout applies regardless of account-, session-, or warehouse-level statement timeout settings.

Even though the account, session, and warehouse statement timeouts are higher, the task-specific timeout takes precedence for task execution. As a result, the INSERT statement will be terminated after 1 minute (Answer A).

This is a key SnowPro Architect concept: tasks have their own execution limits that override other timeout parameters. Architects must ensure that task timeouts are configured appropriately for long-running operations or redesign workloads to fit within task constraints.

=========

QUESTION NO: 35 [Snowflake Ecosystem and Integrations]

Several in-house applications need to connect to Snowflake without browser access or redirect capabilities.

What is the Snowflake best practice for authentication?

A. Use Snowflake OAuth.

B. Use usernames and passwords.

C. Use external OAuth.

D. Use key pair authentication with a service user.

Answer: D

For non-interactive, service-to-service authentication scenarios, Snowflake recommends key pair authentication using a service user (Answer D). This method avoids hardcoding passwords, supports automated rotation of credentials, and aligns with security best practices.

OAuth-based methods typically require browser redirects or user interaction, which are not available in this scenario. Username/password authentication introduces security risks and operational overhead.

Key pair authentication enables strong, certificate-based security and is widely used in SnowPro Architect designs for applications, ETL tools, and automated workloads.

When loading data into a table that captures the load time in a column with a default value of either CURRENT_TIME () or CURRENT_TIMESTAMP() what will occur?

Options:

All rows loaded using a specific COPY statement will have varying timestamps based on when the rows were inserted.

Any rows loaded using a specific COPY statement will have varying timestamps based on when the rows were read from the source.

Any rows loaded using a specific COPY statement will have varying timestamps based on when the rows were created in the source.

All rows loaded using a specific COPY statement will have the same timestamp value.

Answer:

DExplanation:

According to the Snowflake documentation, when loading data into a table that captures the load time in a column with a default value of either CURRENT_TIME () or CURRENT_TIMESTAMP(), the default value is evaluated once per COPY statement, not once per row. Therefore, all rows loaded using a specific COPY statement will have the same timestamp value. This behavior ensures that the timestamp value reflects the time when the data was loaded into the table, not when the data was read from the source or created in the source. References:

Snowflake Documentation: Loading Data into Tables with Default Values

Snowflake Documentation: COPY INTO table

Two queries are run on the customer_address table:

create or replace TABLE CUSTOMER_ADDRESS ( CA_ADDRESS_SK NUMBER(38,0), CA_ADDRESS_ID VARCHAR(16), CA_STREET_NUMBER VARCHAR(IO) CA_STREET_NAME VARCHAR(60), CA_STREET_TYPE VARCHAR(15), CA_SUITE_NUMBER VARCHAR(10), CA_CITY VARCHAR(60), CA_COUNTY

VARCHAR(30), CA_STATE VARCHAR(2), CA_ZIP VARCHAR(10), CA_COUNTRY VARCHAR(20), CA_GMT_OFFSET NUMBER(5,2), CA_LOCATION_TYPE

VARCHAR(20) );

ALTER TABLE DEMO_DB.DEMO_SCH.CUSTOMER_ADDRESS ADD SEARCH OPTIMIZATION ON SUBSTRING(CA_ADDRESS_ID);

Which queries will benefit from the use of the search optimization service? (Select TWO).

Options:

select * from DEMO_DB.DEMO_SCH.CUSTOMER_ADDRESS Where substring(CA_ADDRESS_ID,1,8)= substring('AAAAAAAAPHPPLBAAASKDJHASLKDJHASKJD',1,8);

select * from DEMO_DB.DEMO_SCH.CUSTOMER_ADDRESS Where CA_ADDRESS_ID= substring('AAAAAAAAPHPPLBAAASKDJHASLKDJHASKJD',1,16);

select*fromDEMO_DB.DEMO_SCH.CUSTOMER_ADDRESSWhereCA_ADDRESS_IDLIKE ’%BAAASKD%';

select*fromDEMO_DB.DEMO_SCH.CUSTOMER_ADDRESSWhereCA_ADDRESS_IDLIKE '%PHPP%';

select*fromDEMO_DB.DEMO_SCH.CUSTOMER_ADDRESSWhereCA_ADDRESS_IDNOT LIKE '%AAAAAAAAPHPPL%';

Answer:

A, BExplanation:

The use of the search optimization service in Snowflake is particularly effective when queries involve operations that match exact substrings or start from the beginning of a string. The ALTER TABLE command adding search optimization specifically for substrings on theCA_ADDRESS_IDfield allows the service to create an optimized search path for queries using substring matches.

Option Abenefits because it directly matches a substring from the start of theCA_ADDRESS_ID, aligning with the optimization's capability to quickly locate records based on the beginning segments of strings.

Assuming all Snowflake accounts are using an Enterprise edition or higher, in which development and testing scenarios would be copying of data be required, and zero-copy cloning not be suitable? (Select TWO).

Options:

Developers create their own datasets to work against transformed versions of the live data.

Production and development run in different databases in the same account, and Developers need to see production-like data but with specific columns masked.

Data is in a production Snowflake account that needs to be provided to Developers in a separate development/testing Snowflake account in the same cloud region.

Developers create their own copies of a standard test database previously created for them in the development account, for their initial development and unit testing.

The release process requires pre-production testing of changes with data of production scale and complexity. For security reasons, pre-production also runs in the production account.

Answer:

B, CExplanation:

https://docs.snowflake.com/en/user-guide/tag-based-masking-policies#considerations

Which of the following are characteristics of Snowflake’s parameter hierarchy?

Options:

Session parameters override virtual warehouse parameters.

Virtual warehouse parameters override user parameters.

Table parameters override virtual warehouse parameters.

Schema parameters override account parameters.

Answer:

BExplanation:

In Snowflake's parameter hierarchy, virtual warehouse parameters take precedence over user parameters. This hierarchy is designed to ensure that settings at the virtual warehouse level, which typically reflect the requirements of a specific workload or set of queries, override the preferences set at the individual user level. This helps maintain consistent performance and resource utilization as specified by the administrators managing the virtual warehouses.

A Developer is having a performance issue with a Snowflake query. The query receives up to 10 different values for one parameter and then performs an aggregation over the majority of a fact table. It then

joins against a smaller dimension table. This parameter value is selected by the different query users when they execute it during business hours. Both the fact and dimension tables are loaded with new data in an overnight import process.

On a Small or Medium-sized virtual warehouse, the query performs slowly. Performance is acceptable on a size Large or bigger warehouse. However, there is no budget to increase costs. The Developer

needs a recommendation that does not increase compute costs to run this query.

What should the Architect recommend?

Options:

Create a task that will run the 10 different variations of the query corresponding to the 10 different parameters before the users come in to work. The query results will then be cached and ready to respond quickly when the users re-issue the query.

Create a task that will run the 10 different variations of the query corresponding to the 10 different parameters before the users come in to work. The task will be scheduled to align with the users' working hours in order to allow the warehouse cache to be used.

Enable the search optimization service on the table. When the users execute the query, the search optimization service will automatically adjust the query execution plan based on the frequently-used parameters.

Create a dedicated size Large warehouse for this particular set of queries. Create a new role that has USAGE permission on this warehouse and has the appropriate read permissions over the fact and dimension tables. Have users switch to this role and use this warehouse when they want to access this data.

Answer:

CExplanation:

Enabling the search optimization service on the table can improve the performance of queries that have selective filtering criteria, which seems to be the case here. This service optimizes the execution of queries by creating a persistent data structure called a search access path, which allows some micro-partitions to be skipped during the scanning process. This can significantly speed up query performance without increasing compute costs1.

References

•Snowflake Documentation on Search Optimization Service1.

A Data Engineer is designing a near real-time ingestion pipeline for a retail company to ingest event logs into Snowflake to derive insights. A Snowflake Architect is asked to define security best practices to configure access control privileges for the data load for auto-ingest to Snowpipe.

What are the MINIMUM object privileges required for the Snowpipe user to execute Snowpipe?

Options:

OWNERSHIP on the named pipe, USAGE on the named stage, target database, and schema, and INSERT and SELECT on the target table

OWNERSHIP on the named pipe, USAGE and READ on the named stage, USAGE on the target database and schema, and INSERT end SELECT on the target table

CREATE on the named pipe, USAGE and READ on the named stage, USAGE on the target database and schema, and INSERT end SELECT on the target table

USAGE on the named pipe, named stage, target database, and schema, and INSERT and SELECT on the target table

Answer:

BExplanation:

According to the SnowPro Advanced: Architect documents and learning resources, the minimum object privileges required for the Snowpipe user to execute Snowpipe are:

OWNERSHIP on the named pipe. This privilege allows the Snowpipe user to create, modify, and drop the pipe object that defines the COPY statement for loading data from the stage to the table1.

USAGE and READ on the named stage. These privileges allow the Snowpipe user to access and read the data files from the stage that are loaded by Snowpipe2.

USAGE on the target database and schema. These privileges allow the Snowpipe user to access the database and schema that contain the target table3.

INSERT and SELECT on the target table. These privileges allow the Snowpipe user to insert data into the table and select data from the table4.

The other options are incorrect because they do not specify the minimum object privileges required for the Snowpipe user to execute Snowpipe. Option A is incorrect because it does not include the READ privilege on the named stage, which is required for the Snowpipe user to read the data files from the stage. Option C is incorrect because it does not include the OWNERSHIP privilege on the named pipe, which is required for the Snowpipe user to create, modify, and drop the pipe object. Option D is incorrect because it does not include the OWNERSHIP privilege on the named pipe or the READ privilege on the named stage, which are both required for the Snowpipe user to execute Snowpipe. References: CREATE PIPE | Snowflake Documentation, CREATE STAGE | Snowflake Documentation, CREATE DATABASE | Snowflake Documentation, CREATE TABLE | Snowflake Documentation

Which Snowflake objects can be used in a data share? (Select TWO).

Options:

Standard view

Secure view

Stored procedure

External table

Stream

Answer:

B, DExplanation:

https://docs.snowflake.com/en/user-guide/data-sharing-intro

An Architect has designed a data pipeline that Is receiving small CSV files from multiple sources. All of the files are landing in one location. Specific files are filtered for loading into Snowflake tables using the copy command. The loading performance is poor.

What changes can be made to Improve the data loading performance?

Options:

Increase the size of the virtual warehouse.

Create a multi-cluster warehouse and merge smaller files to create bigger files.

Create a specific storage landing bucket to avoid file scanning.

Change the file format from CSV to JSON.

Answer:

BExplanation:

According to the Snowflake documentation, the data loading performance can be improved by following some best practices and guidelines for preparing and staging the data files. One of the recommendations is to aim for data files that are roughly 100-250 MB (or larger) in size compressed, as this will optimize the number of parallel operations for a load. Smaller files should be aggregated and larger files should be split to achieve this size range. Another recommendation is to use a multi-cluster warehouse for loading, as this will allow for scaling up or out the compute resources depending on the load demand. A single-cluster warehouse may not be able to handle the load concurrency and throughput efficiently. Therefore, by creating a multi-cluster warehouse and merging smaller files to create bigger files, the data loading performance can be improved. References:

Data Loading Considerations

Preparing Your Data Files

Planning a Data Load

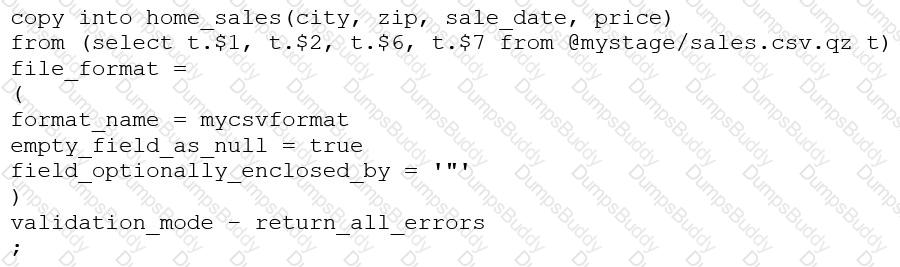

Consider the following COPY command which is loading data with CSV format into a Snowflake table from an internal stage through a data transformation query.

This command results in the following error:

SQL compilation error: invalid parameter 'validation_mode'

Assuming the syntax is correct, what is the cause of this error?

Options:

The VALIDATION_MODE parameter supports COPY statements that load data from external stages only.

The VALIDATION_MODE parameter does not support COPY statements with CSV file formats.

The VALIDATION_MODE parameter does not support COPY statements that transform data during a load.

The value return_all_errors of the option VALIDATION_MODE is causing a compilation error.

Answer:

CExplanation:

The VALIDATION_MODE parameter is used to specify the behavior of the COPY statement when loading data into a table. It is used to specify whether the COPY statement should return an error if any of the rows in the file are invalid or if it should continue loading the valid rows. The VALIDATION_MODE parameter is only supported for COPY statements that load data from external stages1.

The query in the question uses a data transformation query to load data from an internal stage. A data transformation query is a query that transforms the data during the load process, such as parsing JSON or XML data, applying functions, or joining with other tables2.

According to the documentation, VALIDATION_MODE does not support COPY statements that transform data during a load. If the parameter is specified, the COPY statement returns an error1. Therefore, option C is the correct answer.

COPY INTO <title> : Transforming Data During a Load

Which technique will efficiently ingest and consume semi-structured data for Snowflake data lake workloads?

Options:

IDEF1X

Schema-on-write

Schema-on-read

Information schema

Answer:

CExplanation:

Option C is the correct answer because schema-on-read is a technique that allows Snowflake to ingest and consume semi-structured data without requiring a predefined schema. Snowflake supports various semi-structured data formats such as JSON, Avro, ORC, Parquet, and XML, and provides native data types (ARRAY, OBJECT, and VARIANT) for storing them. Snowflake also provides native support for querying semi-structured data using SQL and dot notation. Schema-on-read enables Snowflake to query semi-structured data at the same speed as performing relational queries while preserving the flexibility of schema-on-read. Snowflake’s near-instant elasticity rightsizes compute resources, and consumption-based pricing ensures you only pay for what you use.

Option A is incorrect because IDEF1X is a data modeling technique that defines the structure and constraints of relational data using diagrams and notations. IDEF1X is not suitable for ingesting and consuming semi-structured data, which does not have a fixed schema or structure.

Option B is incorrect because schema-on-write is a technique that requires defining a schema before loading and processing data. Schema-on-write is not efficient for ingesting and consuming semi-structured data, which may have varying or complex structures that are difficult to fit into a predefined schema. Schema-on-write also introduces additional overhead and complexity for data transformation and validation.

Option D is incorrect because information schema is a set of metadata views that provide information about the objects and privileges in a Snowflake database. Information schema is not a technique for ingesting and consuming semi-structured data, but rather a way of accessing metadata about the data.

A retailer's enterprise data organization is exploring the use of Data Vault 2.0 to model its data lake solution. A Snowflake Architect has been asked to provide recommendations for using Data Vault 2.0 on Snowflake.

What should the Architect tell the data organization? (Select TWO).

Options:

Change data capture can be performed using the Data Vault 2.0 HASH_DIFF concept.

Change data capture can be performed using the Data Vault 2.0 HASH_DELTA concept.

Using the multi-table insert feature in Snowflake, multiple Point-in-Time (PIT) tables can be loaded in parallel from a single join query from the data vault.

Using the multi-table insert feature, multiple Point-in-Time (PIT) tables can be loaded sequentially from a single join query from the data vault.

There are performance challenges when using Snowflake to load multiple Point-in-Time (PIT) tables in parallel from a single join query from the data vault.

Answer:

A, CExplanation:

Data Vault 2.0 on Snowflake supports the HASH_DIFF concept for change data capture, which is a method to detect changes in the data by comparing the hash values of the records. Additionally, Snowflake’s multi-table insert feature allows for the loading of multiple PIT tables in parallel from a single join query, which can significantly streamline the data loading process and improve performance1.

References =

•Snowflake’s documentation on multi-table inserts1

•Blog post on optimizing Data Vault architecture on Snowflake2

An Architect needs to design a Snowflake account and database strategy to store and analyze large amounts of structured and semi-structured data. There are many business units and departments within the company. The requirements are scalability, security, and cost efficiency.

What design should be used?

Options:

Create a single Snowflake account and database for all data storage and analysis needs, regardless of data volume or complexity.

Set up separate Snowflake accounts and databases for each department or business unit, to ensure data isolation and security.

Use Snowflake's data lake functionality to store and analyze all data in a central location, without the need for structured schemas or indexes

Use a centralized Snowflake database for core business data, and use separate databases for departmental or project-specific data.

Answer:

DExplanation:

The best design to store and analyze large amounts of structured and semi-structured data for different business units and departments is to use a centralized Snowflake database for core business data, and use separate databases for departmental or project-specific data. This design allows for scalability, security, and cost efficiency by leveraging Snowflake’s features such as:

Database cloning: Cloning a database creates a zero-copy clone that shares the same data files as the original database, but can be modified independently. This reduces storage costs and enables fast and consistent data replication for different purposes.

Database sharing: Sharing a database allows granting secure and governed access to a subset of data in a database to other Snowflake accounts or consumers. This enables data collaboration and monetization across different business units or external partners.

Warehouse scaling: Scaling a warehouse allows adjusting the size and concurrency of a warehouse to match the performance and cost requirements of different workloads. This enables optimal resource utilization and flexibility for different data analysis needs. References: Snowflake Documentation: Database Cloning, Snowflake Documentation: Database Sharing, [Snowflake Documentation: Warehouse Scaling]

A Snowflake Architect is designing a multi-tenant application strategy for an organization in the Snowflake Data Cloud and is considering using an Account Per Tenant strategy.

Which requirements will be addressed with this approach? (Choose two.)

Options:

There needs to be fewer objects per tenant.

Security and Role-Based Access Control (RBAC) policies must be simple to configure.

Compute costs must be optimized.

Tenant data shape may be unique per tenant.

Storage costs must be optimized.

Answer:

B, DExplanation:

The Account Per Tenant strategy involves creating separate Snowflake accounts for each tenant within the multi-tenant application. This approach offers a number of advantages.

Option B:With separate accounts, each tenant's environment is isolated, making security and RBAC policies simpler to configure and maintain. This is because each account can have its own set of roles and privileges without the risk of cross-tenant access or the complexity of maintaining a highly granular permission model within a shared environment.

Option D:This approach also allows for each tenant to have a unique data shape, meaning that the database schema can be tailored to the specific needs of each tenant without affecting others. This can be essential when tenants have different data models, usage patterns, or application customizations.

An Architect Is designing a data lake with Snowflake. The company has structured, semi-structured, and unstructured data. The company wants to save the data inside the data lake within the Snowflake system. The company is planning on sharing data among Its corporate branches using Snowflake data sharing.

What should be considered when sharing the unstructured data within Snowflake?

Options:

A pre-signed URL should be used to save the unstructured data into Snowflake in order to share data over secure views, with no time limit for the URL.

A scoped URL should be used to save the unstructured data into Snowflake in order to share data over secure views, with a 24-hour time limit for the URL.

A file URL should be used to save the unstructured data into Snowflake in order to share data over secure views, with a 7-day time limit for the URL.

A file URL should be used to save the unstructured data into Snowflake in order to share data over secure views, with the "expiration_time" argument defined for the URL time limit.

Answer:

DExplanation:

According to the Snowflake documentation, unstructured data files can be shared by using a secure view and Secure Data Sharing. A secure view allows the result of a query to be accessed like a table, and a secure view is specifically designated for data privacy. A scoped URL is an encoded URL that permits temporary access to a staged file without granting privileges to the stage. The URL expires when the persisted query result period ends, which is currently 24 hours. A scoped URL is recommended for file administrators to give scoped access to data files to specific roles in the same account. Snowflake records information in the query history about who uses a scoped URL to access a file, and when. Therefore, a scoped URL is the best option to share unstructured data within Snowflake, as it provides security, accountability, and control over the data access. References:

Sharing unstructured Data with a secure view

Introduction to Loading Unstructured Data

What Snowflake features should be leveraged when modeling using Data Vault?

Options:

Snowflake’s support of multi-table inserts into the data model’s Data Vault tables

Data needs to be pre-partitioned to obtain a superior data access performance

Scaling up the virtual warehouses will support parallel processing of new source loads

Snowflake’s ability to hash keys so that hash key joins can run faster than integer joins

Answer:

AExplanation:

These two features are relevant for modeling using Data Vault on Snowflake. Data Vault is a data modeling approach that organizes data into hubs, links, and satellites. Data Vault is designed to enable high scalability, flexibility, and performance for data integration and analytics. Snowflake is a cloud data platform that supports various data modeling techniques, including Data Vault. Snowflake provides some features that can enhance the Data Vault modeling, such as:

Snowflake’s support of multi-table inserts into the data model’s Data Vault tables. Multi-table inserts (MTI) are a feature that allows inserting data from a single query into multiple tables in a single DML statement. MTI can improve the performance and efficiency of loading data into Data Vault tables, especially for real-time or near-real-time data integration. MTI can also reduce the complexity and maintenance of the loading code, as well as the data duplication and latency12.

Scaling up the virtual warehouses will support parallel processing of new source loads. Virtual warehouses are a feature that allows provisioning compute resources on demand for data processing. Virtual warehouses can be scaled up or down by changing the size of the warehouse, which determines the number of servers in the warehouse. Scaling up the virtual warehouses can improve the performance and concurrency of processing new source loads into Data Vault tables, especially for large or complex data sets. Scaling up the virtual warehouses can also leverage the parallelism and distribution of Snowflake’s architecture, which can optimize the data loading and querying34.

Snowflake Documentation: Multi-table Inserts

Snowflake Blog: Tips for Optimizing the Data Vault Architecture on Snowflake

Snowflake Documentation: Virtual Warehouses

Snowflake Blog: Building a Real-Time Data Vault in Snowflake

An Architect is designing a file ingestion recovery solution. The project will use an internal named stage for file storage. Currently, in the case of an ingestion failure, the Operations team must manually download the failed file and check for errors.

Which downloading method should the Architect recommend that requires the LEAST amount of operational overhead?

Options:

Use the Snowflake Connector for Python, connect to remote storage and download the file.

Use the get command in SnowSQL to retrieve the file.

Use the get command in Snowsight to retrieve the file.

Use the Snowflake API endpoint and download the file.

Answer:

BExplanation:

The get command in SnowSQL is a convenient way to download files from an internal stage to a local directory. The get command can be used in interactive mode or in a script, and it supports wildcards and parallel downloads. The get command also allows specifying the overwrite option, which determines how to handle existing files with the same name2

The Snowflake Connector for Python, the Snowflake API endpoint, and the get command in Snowsight are not recommended methods for downloading files from an internal stage, because they require more operational overhead than the get command in SnowSQL. The Snowflake Connector for Python and the Snowflake API endpoint require writing and maintaining code to handle the connection, authentication, and file transfer. The get command in Snowsight requires using the web interface and manually selecting the files to download34 References:

1: SnowPro Advanced: Architect | Study Guide

2: Snowflake Documentation | Using the GET Command

3: Snowflake Documentation | Using the Snowflake Connector for Python

4: Snowflake Documentation | Using the Snowflake API

Snowflake Documentation | Using the GET Command in Snowsight

SnowPro Advanced: Architect | Study Guide

Using the GET Command

Using the Snowflake Connector for Python

Using the Snowflake API

[Using the GET Command in Snowsight]

When using the COPY INTO

command with the CSV file format, how does the MATCH_BY_COLUMN_NAME parameter behave?

Options:

It expects a header to be present in the CSV file, which is matched to a case-sensitive table column name.

The parameter will be ignored.

The command will return an error.

The command will return a warning stating that the file has unmatched columns.

Answer:

CExplanation:

Comprehensive and Detailed Explanation From Exact Extract:

The MATCH_BY_COLUMN_NAME parameter in the COPY INTO

command is used to load semi-structured or structured data, such as CSV, into columns of the target table by matching column names in the data file with those in the table. For CSV files, this parameter requires specific conditions to be met, particularly the presence of a header row in the file, which is used to map columns to the target table.

According to the official Snowflake documentation, when the MATCH_BY_COLUMN_NAME parameter is used with CSV files, it is only supported in specific scenarios and requires the PARSE_HEADER file format option to be set to TRUE. This option indicates that the first row of the CSV file contains column headers, which Snowflake uses to match with the target table's column names. The matching behavior can be configured as CASE_SENSITIVE or CASE_INSENSITIVE, but the default behavior is case-sensitive unless specified otherwise.

However, there is a critical limitation when using MATCH_BY_COLUMN_NAME with CSV files: as of the latest Snowflake documentation, this feature is in Open Private Preview for CSV files and is not generally available for all accounts. When the MATCH_BY_COLUMN_NAME parameter is specified for a CSV file in an environment where this feature is not enabled, or if the PARSE_HEADER option is not set to TRUE, the COPY INTO command will return an error. This is because Snowflake cannot process the column name matching without the header parsing capability, which is not fully supported for CSV files in general availability.

The exact extract from the Snowflake documentation states:

"For loading CSV files, the MATCH_BY_COLUMN_NAME copy option is available in preview. It requires the use of the above-mentioned CSV file format option PARSE_HEADER = TRUE."

Additionally, the documentation clarifies:

"Boolean that specifies whether to use the first row headers in the data files to determine column names. This file format option is applied to the following actions only: Automatically detecting column definitions by using the INFER_SCHEMA function. Loading CSV data into separate columns by using the INFER_SCHEMA function and MATCH_BY_COLUMN_NAME copy option."

Furthermore, a known issue is noted:

"For CSV only, there is a known issue when the INCLUDE_METADATA copy option is used with MATCH_BY_COLUMN_NAME. Do not use this copy option when loading CSV files until the known issue is resolved."

Given that the MATCH_BY_COLUMN_NAME parameter is not fully supported for CSV files in general availability and requires specific preview conditions, attempting to use it without meeting those conditions, such as PARSE_HEADER = TRUE or enabling the preview feature, results in an error. Therefore, option C is correct: The command will return an error.

Option A is incorrect because, while MATCH_BY_COLUMN_NAME expects a header in the CSV file for matching when the feature is enabled, the case-sensitive matching is only true when explicitly set to CASE_SENSITIVE. Additionally, the feature's limited availability means it is not guaranteed to work without causing an error. Option B is incorrect because the parameter is not simply ignored; it triggers an error if the conditions are not met. Option D is incorrect because Snowflake does not issue a warning for unmatched columns in this context; it fails with an error when the parameter is unsupported or misconfigured.

When loading data from stage using COPY INTO, what options can you specify for the ON_ERROR clause?

Options:

CONTINUE

SKIP_FILE

ABORT_STATEMENT

FAIL

Answer:

A, B, CExplanation:

The ON_ERROR clause is an optional parameter for the COPY INTO command that specifies the behavior of the command when it encounters errors in the files. The ON_ERROR clause can have one of the following values1:

CONTINUE: This value instructs the command to continue loading the file and return an error message for a maximum of one error encountered per data file. The difference between the ROWS_PARSED and ROWS_LOADED column values represents the number of rows that include detected errors. To view all errors in the data files, use the VALIDATION_MODE parameter or query the VALIDATE function1.

SKIP_FILE: This value instructs the command to skip the file when it encounters a data error on any of the records in the file. The command moves on to the next file in the stage and continues loading. The skipped file is not loaded and no error message is returned for the file1.

ABORT_STATEMENT: This value instructs the command to stop loading data when the first error is encountered. The command returns an error message for the file and aborts the load operation. This is the default value for the ON_ERROR clause1.

Therefore, options A, B, and C are correct.

COPY INTO <title>

Is it possible for a data provider account with a Snowflake Business Critical edition to share data with an Enterprise edition data consumer account?

Options:

A Business Critical account cannot be a data sharing provider to an Enterprise consumer. Any consumer accounts must also be Business Critical.

If a user in the provider account with role authority to create or alter share adds an Enterprise account as a consumer, it can import the share.

If a user in the provider account with a share owning role sets share_restrictions to False when adding an Enterprise consumer account, it can import the share.

If a user in the provider account with a share owning role which also has override share restrictions privilege share_restrictions set to False when adding an Enterprise consumer account, it can import the share.

Answer:

DExplanation:

When a SnowflakeBusiness Critical (BC)edition account shares data, it must followdata sharing restrictionsdesigned to maintain thehigher level of compliance and securityguaranteed by BC.

Bydefault, BC accounts canonly share data with other BC (or higher)accounts, to maintain consistent security and compliance (e.g., HIPAA, HITRUST, FedRAMP).

However,an exceptioncan be madeif a user with the proper privilegeexplicitly disables the restriction.

Key Concept: share_restrictions

Snowflake enforcesdata sharing restrictionsby default forBC accounts.

Arole with the OVERRIDE SHARE RESTRICTIONS global privilegecan bypass this by setting theshare_restrictions = FALSEwhen adding the target account.

Correct Option: D

This is correct because:

The usermust have a role with the OVERRIDE SHARE RESTRICTIONS privilege.

That user can thenset share_restrictions = FALSEwhen adding the Enterprise edition consumer account.

Official Documentation Extract:

"If the data provider is a Business Critical (or higher) account, Snowflake enforces a restriction by default that only allows sharing data with other Business Critical (or higher) accounts. A user in the provider account with a role that has the global privilege OVERRIDE SHARE RESTRICTIONS can override this restriction by explicitly setting SHARE_RESTRICTIONS = FALSE when adding the consumer account."

Source:Snowflake Docs – CREATE SHARE

Why Other Options Are Incorrect:

A.Incorrect – This is not absolute. Business Critical accountscanshare data with Enterprise accounts,if the restriction is explicitly overridden.

B.Incorrect – Simply having authority to create or alter a share isnot enough. You must have the OVERRIDE SHARE RESTRICTIONS privilege and set the restriction explicitly.

C.Incorrect – Setting share_restrictions = FALSE is required, but theprivilege to overridemust also be held by the role. Without the privilege, the action will fail.

What transformations are supported in the below SQL statement? (Select THREE).

CREATE PIPE ... AS COPY ... FROM (...)

Options:

Data can be filtered by an optional where clause.

Columns can be reordered.

Columns can be omitted.

Type casts are supported.

Incoming data can be joined with other tables.

The ON ERROR - ABORT statement command can be used.

Answer:

A, B, CExplanation:

The SQL statement is a command for creating a pipe in Snowflake, which is an object that defines the COPY INTO <title> statement used by Snowpipe to load data from an ingestion queue into tables1. The statement uses a subquery in the FROM clause to transform the data from the staged files before loading it into the table2.

The transformations supported in the subquery are as follows2:

Data can be filtered by an optional WHERE clause, which specifies a condition that must be satisfied by the rows returned by the subquery. For example:

SQLAI-generated code. Review and use carefully. More info on FAQ.

createpipe mypipeas

copyintomytable

from(

select*from@mystage

wherecol1='A'andcol2>10

);

Columns can be reordered, which means changing the order of the columns in the subquery to match the order of the columns in the target table. For example:

SQLAI-generated code. Review and use carefully. More info on FAQ.

createpipe mypipeas

copyintomytable (col1, col2, col3)

from(

selectcol3, col1, col2from@mystage

);

Columns can be omitted, which means excluding some columns from the subquery that are not needed in the target table. For example:

SQLAI-generated code. Review and use carefully. More info on FAQ.

createpipe mypipeas

copyintomytable (col1, col2)

from(

selectcol1, col2from@mystage

);

The other options are not supported in the subquery because2:

Type casts are not supported, which means changing the data type of a column in the subquery. For example, the following statement will cause an error:

SQLAI-generated code. Review and use carefully. More info on FAQ.

createpipe mypipeas

copyintomytable (col1, col2)

from(

selectcol1::date, col2from@mystage

);

Incoming data can not be joined with other tables, which means combining the data from the staged files with the data from another table in the subquery. For example, the following statement will cause an error:

SQLAI-generated code. Review and use carefully. More info on FAQ.

createpipe mypipeas

copyintomytable (col1, col2, col3)

from(

selects.col1, s.col2, t.col3from@mystages

joinothertable tons.col1=t.col1

);

The ON ERROR - ABORT statement command can not be used, which means aborting the entire load operation if any error occurs. This command can only be used in the COPY INTO <title> statement, not in the subquery. For example, the following statement will cause an error:

SQLAI-generated code. Review and use carefully. More info on FAQ.

createpipe mypipeas

copyintomytable

from(

select*from@mystage

onerror abort

);

1: CREATE PIPE | Snowflake Documentation

2: Transforming Data During a Load | Snowflake Documentation

An Architect needs to automate the daily Import of two files from an external stage into Snowflake. One file has Parquet-formatted data, the other has CSV-formatted data.

How should the data be joined and aggregated to produce a final result set?

Options:

Use Snowpipe to ingest the two files, then create a materialized view to produce the final result set.

Create a task using Snowflake scripting that will import the files, and then call a User-Defined Function (UDF) to produce the final result set.

Create a JavaScript stored procedure to read. join, and aggregate the data directly from the external stage, and then store the results in a table.

Create a materialized view to read, Join, and aggregate the data directly from the external stage, and use the view to produce the final result set

Answer:

BExplanation:

According to the Snowflake documentation, tasks are objects that enable scheduling and execution of SQL statements or JavaScript user-defined functions (UDFs) in Snowflake. Tasks can be used to automate data loading, transformation, and maintenance operations. Snowflake scripting is a feature that allows writing procedural logic using SQL statements and JavaScript UDFs. Snowflake scripting can be used to create complex workflows and orchestrate tasks. Therefore, the best option to automate the daily import of two files from an external stage into Snowflake, join and aggregate the data, and produce a final result set is to create a task using Snowflake scripting that will import the files using the COPY INTO command, and then call a UDF to perform the join and aggregation logic. The UDF can return a table or a variant value as the final result set. References:

Tasks

Snowflake Scripting

User-Defined Functions

What are some of the characteristics of result set caches? (Choose three.)

Options:

Time Travel queries can be executed against the result set cache.

Snowflake persists the data results for 24 hours.

Each time persisted results for a query are used, a 24-hour retention period is reset.

The data stored in the result cache will contribute to storage costs.

The retention period can be reset for a maximum of 31 days.

The result set cache is not shared between warehouses.

Answer:

B, C, FExplanation:

In Snowflake, the characteristics of result set caches include persistence of data results for 24 hours (B), each use of persisted results resets the 24-hour retention period (C), and result set caches are not shared between different warehouses (F). The result set cache is specifically designed to avoid repeated execution of the same query within this timeframe, reducing computational overhead and speeding up query responses. These caches do not contribute to storage costs, and their retention period cannot be extended beyond the default duration nor up to 31 days, as might be misconstrued.

A company is using Snowflake in Azure in the Netherlands. The company analyst team also has data in JSON format that is stored in an Amazon S3 bucket in the AWS Singapore region that the team wants to analyze.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

Options:

Use a materialized view on top of an external table against the S3 bucket in AWS Singapore.

Use an external table against the S3 bucket in AWS Singapore and copy the data into transient tables.

Copy the data between providers from S3 to Azure Blob storage to collocate, then use Snowpipe for data ingestion.

Use AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then use an external table against the Blob storage.

Answer:

AExplanation:

Option A is the best design to meet the requirements because it uses a materialized view on top of an external table against the S3 bucket in AWS Singapore. A materialized view is a database object that contains the results of a query and can be refreshed periodically to reflect changes in the underlying data1. An external table is a table that references data files stored in a cloud storage service, such as Amazon S32. By using a materialized view on top of an external table, the company can provide access to frequently changing data, keep egress costs to a minimum, and maintain low latency. This is because the materialized view will cache the query results in Snowflake, reducing the need to access the external data files and incur network charges. The materialized view will also improve the query performance by avoiding scanning the external data files every time. The materialized view can be refreshed on a schedule or on demand to capture the changes in the external data files1.

Option B is not the best design because it uses an external table against the S3 bucket in AWS Singapore and copies the data into transient tables. A transient table is a tablethat is not subject to the Time Travel and Fail-safe features of Snowflake, and is automatically purged after a period of time3. By using an external table and copying the data into transient tables, the company will incur more egress costs and operational overhead than using a materialized view. This is because the external table will access the external data files every time a query is executed, and the copy operation will also transfer data from S3 to Snowflake. The transient tables will also consume more storage space in Snowflake and require manual maintenance to ensure they are up to date.

Option C is not the best design because it copies the data between providers from S3 to Azure Blob storage to collocate, then uses Snowpipe for data ingestion. Snowpipe is a service that automates the loading of data from external sources into Snowflake tables4. By copying the data between providers, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. Snowpipe will also add another layer of processing and storage in Snowflake, which may not be necessary if the external data files are already in a queryable format.

Option D is not the best design because it uses AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then uses an external table against the Blob storage. AWS Transfer Family is a service that enables secure and seamless transfer of files over SFTP, FTPS, and FTP to and from Amazon S3 or Amazon EFS5. By using AWS Transfer Family, the company will incur high egress costs and latency, as well as operational complexity and maintenance of the infrastructure. The external table will also access the external data files every time a query is executed, which may affect the query performance.

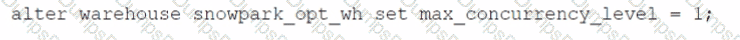

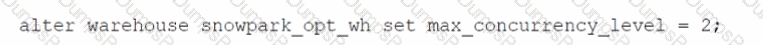

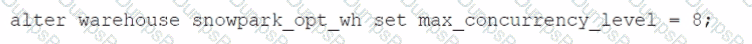

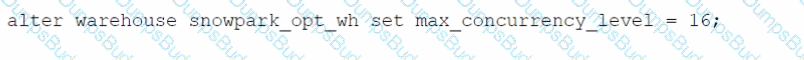

Which SQL ALTER command will MAXIMIZE memory and compute resources for a Snowpark stored procedure when executed on the snowpark_opt_wh warehouse?

Options:

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 1;

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 2;

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 8;

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 16;

Answer:

AExplanation: